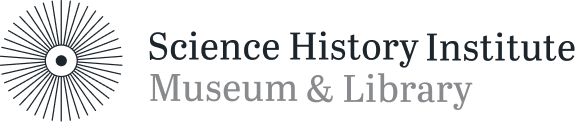

In this episode of The Disappearing Spoon, host Sam Kean explains how what Albert Einstein considered a huge mistake helped establish one of science’s greatest fields. This is the second in a three-part series on legendary physicists and their dumbest mistakes.

Listen to Part 1: Isaac Newton

Listen to Part 3: Stephen Hawking

About The Disappearing Spoon

The Science History Institute has teamed up with New York Times best-selling author Sam Kean to bring a second history of science podcast to our listeners. The Disappearing Spoon tells little-known stories from our scientific past—from the shocking way the smallpox vaccine was transported around the world to why we don’t have a birth control pill for men. These topsy-turvy science tales, some of which have never made it into history books, are surprisingly powerful and insightful.

Credits

Host: Sam Kean

Senior Producer: Mariel Carr

Producer: Rigoberto Hernandez

Associate Producer: Padmini Raghunath

Audio Engineer: Jonathan Pfeffer

Transcript

This is part two in a three-part series on legendary physicists and their biggest blunders. Today, we tackle Albert Einstein.

Albert Einstein was a physics rebel. He overthrew centuries of thinking about gravity and revolutionized our understanding of space and time. But there were certain things that Einstein was kinda fussy about preserving. Like the size of the universe.

In Einstein’s day, most scientists believed the universe was constant in size. It never got any bigger or any smaller, and never really changed at all. This is usually called the static view of the cosmos, though it was sometimes loosely described as a steady-state view.

And Einstein liked that view. It pleased him to think of the cosmos as never-changing—having no beginning and no end. It just was, and always shall be.

The problem was, Einstein’s own theories disagreed with him. General relativity deals with gravity, and gravity is an attractive force. If you have a big cloud of dust in space, the dust will eventually contract together and crunch down into a central mass.

The same basic idea applies to the universe. All the stars and galaxies are spread out now, but in theory they should eventually crunch back together as gravity pulls them in. That’s not a steady-state cosmos. That’s a crushed cosmos, and Einstein didn’t like it.

So, he fudged things. In 1917, he took one of his general relativity equations and added another term to it. He labeled this term with the Greek letter lambda. This lambda fudge factor was supposedly a repulsive force that pushed matter apart. It counteracted gravity, and kept the universe from crunching in on itself.

Now, there was no evidence for this repulsive force. And Einstein really did fudge things here. He just wrote down a plus sign and then added lambda times another factor at the end of his equation, kind of like how we’d scribble, “oh, yeah, uh, ‘+ eggs’” on a grocery list. He just made it up!
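For reference, here is how the field equations of general relativity are usually written today with the extra term included. This is the modern textbook form, not Einstein’s own 1917 notation, and sign conventions vary between textbooks; today the term is normally written with a capital Λ:

```latex
R_{\mu\nu} - \frac{1}{2} R \, g_{\mu\nu} + \Lambda \, g_{\mu\nu} = \frac{8 \pi G}{c^{4}} T_{\mu\nu}
```

Roughly speaking, the left side describes the curvature of spacetime and the right side describes the matter and energy in it. The Λ g_μν piece is the added term: a constant times the metric g_μν. With Λ positive, it acts like a repulsion on the very largest scales.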

Other scientists didn’t know what to make of this lambda fudge factor. Most of them ignored it. Others even opposed it. For instance, there was Georges Lemaître [“zhorge le-MAH-ter”], a Belgian priest-slash-physicist. And yes, you heard that right: a priest-slash-physicist.

Lemaître came up with the idea that maybe the universe was far smaller in the past. Perhaps it started as a tiny ball or something, and it’s been getting steadily bigger since then.

Lemaître published this idea in 1927, and it wasn’t taken very seriously. At least not until 1929. That year, an American astronomer named Edwin Hubble found that the old steady-state view of the cosmos—where it was static and always one size—was wrong. Hubble found that the universe was actually expanding—getting bigger.

Which meant the priest-slash-physicist was right: if the universe was getting bigger now, it must have been smaller in the past. And if you run things back far enough, the universe must have started off very tiny—a dense ball of matter that then exploded outward and gave rise to everything around us. We now call this idea the Big Bang, and virtually every physicist in the world subscribes to it.

All of which meant that Einstein was left with egg on his face. He’d added the lambda fudge factor to make the universe stable, so it was always one size. But the universe wasn’t always the same size. It was once much smaller, and nowadays it’s getting bigger. Lambda was totally unnecessary. Einstein eventually scratched it off the end of his equation, and, according to the physicist George Gamow, he later called it “my biggest blunder,” the biggest mistake of his career.

But it wasn’t. In fact, it might have been the best idea he ever had.

From the Science History Institute, this is Sam Kean and The Disappearing Spoon, a topsy-turvy science-y history podcast where footnotes become the real story.

Flash-forward to 1998. That year, two teams of astronomers were looking at exploding stars called supernovae, which are very bright but very far away, to see how quickly they were moving away from Earth. Remember, Edwin Hubble had found that we live in an expanding universe. Stuff is still hurtling outward from the initial Big Bang, so almost every distant galaxy you see is slowly getting farther from us.

But in 1998, these astronomers found something strange. Like everyone else, they assumed that distant stars were moving away from Earth at a steady rate, or perhaps slowing down slightly as gravity tugged them back. But, no.

The stars they examined were actually accelerating away from us. The speed was changing over time, getting bigger and bigger each year. And it wasn’t just these stars. It turns out that all distant stars and galaxies are accelerating away from Earth.

No one expected this, but the findings were pretty solid. In fact, three of the scientists involved, Saul Perlmutter, Brian Schmidt, and Adam Riess, shared the 2011 Nobel Prize in Physics for discovering this acceleration.

This discovery also forced people to reconsider Einstein’s lambda fudge factor. Again, people thought that the universe was expanding because of the momentum from the Big Bang. But momentum alone, with gravity tugging back, could explain only expansion at a steady speed or a slowing one. If the universe was accelerating, that Big Bang momentum wasn’t enough. If it’s accelerating, something must be actively pushing stars apart.
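Cosmologists often make the “momentum isn’t enough” argument precise with the deceleration parameter, written q₀. For a universe containing only ordinary matter and a cosmological constant (radiation ignored), q₀ = Ω_m/2 − Ω_Λ, where Ω_m and Ω_Λ are the fractions of the universe’s energy budget in matter and in lambda. A positive q₀ means the expansion is slowing; a negative one means it is speeding up. The sketch below uses round illustrative values of 0.3 and 0.7, roughly those favored today, rather than any specific measurement:

```python
def deceleration_parameter(omega_matter: float, omega_lambda: float) -> float:
    """Deceleration parameter q0 = omega_matter / 2 - omega_lambda.

    Assumes a universe of pressureless matter plus a cosmological constant,
    with radiation neglected. q0 > 0: expansion slowing; q0 < 0: speeding up.
    """
    return omega_matter / 2.0 - omega_lambda


def describe(q0: float) -> str:
    """Translate the sign of q0 into words."""
    if q0 > 0:
        return "slowing down"
    if q0 < 0:
        return "speeding up"
    return "coasting at a steady rate"


# Matter only: Big Bang momentum plus gravity, and nothing pushing outward.
q_matter_only = deceleration_parameter(1.0, 0.0)
# Illustrative (assumed) mix of roughly 30% matter and 70% lambda.
q_with_lambda = deceleration_parameter(0.3, 0.7)

print(f"matter only: q0 = {q_matter_only:+.2f} ({describe(q_matter_only)})")
print(f"with lambda: q0 = {q_with_lambda:+.2f} ({describe(q_with_lambda)})")
```

With matter alone, q₀ stays positive; only a large enough lambda term drags it below zero, which is the kind of acceleration the 1998 measurements pointed to.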

In other words, the astronomers had discovered a repulsive force, just like the one Einstein had written down. The lambda fudge factor existed after all. And you know, everyone had a good laugh over this. Einstein’s self-proclaimed biggest blunder wasn’t a blunder at all. Even when Einstein was wrong, he was right! That’s genius.

But remember, Einstein had no idea what the lambda repulsive force was; he just wrote it down. Now that lambda was real, scientists had to take a harder look and figure out its true nature.

Lots of suggestions arose. But the most popular idea linked lambda with something called the “energy density of the vacuum.” Now, that sounds a bit odd. What’s the energy density of the vacuum?

Let’s break it down. Density you’re probably familiar with. If something is dense, there’s more stuff packed inside it for a given volume. Usually we think about density in terms of matter, of atoms and things. But energy can have density, too.

Some regions, like deep space, have very little energy, so they’re not energy-dense. Other regions, like inside a star, have lots of energy, so they’re quite energy-dense. Lambda is just a measure of how much energy density there is in a vacuum.

But that might sound a bit weird, too. Does a vacuum, empty space, have energy stored in it?

Yes, it does. Imagine you had a strong microscope—really strong. So strong you could see subatomic particles. Now imagine you were looking at what seemed like empty space—space with nothing in it.

Well, it wouldn’t remain empty for long. Every so often you’d see <BLIP> two particles appear out of nowhere. They just poof into existence, as if a magician conjured them up. <BLIP>

Now, these magically appearing particles don’t last long. Technically, they’re virtual particles, and have extremely short lifespans. But they do exist.

And the reason they exist is that empty space has energy stored in it. As Einstein knew, E=mc², so you can convert energy into matter like particles. And the raw material from which virtual particles arise is the energy of empty space.
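To put a rough number on “convert energy into matter,” the sketch below applies E = mc² to one example. The transcript doesn’t say which particles pop up, so the electron-positron pair here is an assumption chosen for illustration, and the constants are rounded:

```python
ELECTRON_MASS_KG = 9.109e-31   # electron (and positron) rest mass
SPEED_OF_LIGHT = 2.998e8       # m/s
JOULES_PER_MEV = 1.602e-13     # 1 MeV expressed in joules


def rest_energy_mev(mass_kg: float) -> float:
    """Rest-mass energy E = m c^2, converted from joules to MeV."""
    return mass_kg * SPEED_OF_LIGHT**2 / JOULES_PER_MEV


# An electron and a positron have the same mass, so a pair costs twice as much.
pair_energy_mev = 2 * rest_energy_mev(ELECTRON_MASS_KG)
print(f"electron-positron pair: about {pair_energy_mev:.3f} MeV")
```

That works out to roughly 1.02 MeV: minuscule in everyday terms, but not nothing.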

Now, I realize how strange this all sounds, but it’s very well established science. Even empty space—even a vacuum—has energy stored inside it.

And the key point here is that vacuum energy, like all energy, can do work. For instance, the work of pushing stars and galaxies apart. That’s why many scientists link the energy density of the vacuum to Einstein’s repulsive lambda force.

So it all fits together. We know our universe is expanding at an accelerating clip. We also know that all space is permeated by a murky but real energy known as vacuum energy. Vacuum energy can do work, and it just makes sense that vacuum energy would be doing the work of pushing stars apart.

There’s just one problem.

Astronomers can measure how quickly stars are accelerating away from us. And they can see that this acceleration is really, really tiny. So the value of lambda, and with it the vacuum energy, must be tiny, too. In fact, when you crunch the numbers, lambda is ridiculously tiny: something like 10 to the minus 52nd power, measured in inverse square meters. That’s a decimal point followed by over fifty zeroes, then a measly 1.
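Here is one way to turn lambda into an energy density. The sketch assumes the 10⁻⁵² figure is lambda in inverse square meters (measured values come out near 1.1 × 10⁻⁵² m⁻²) and uses the standard conversion u = Λc⁴/(8πG), with rounded constants:

```python
import math

C = 2.998e8                  # speed of light, m/s
G = 6.674e-11                # Newton's gravitational constant, m^3 kg^-1 s^-2
LAMBDA = 1.1e-52             # assumed observed cosmological constant, 1/m^2
HYDROGEN_ATOM_KG = 1.674e-27 # used only to make the result tangible


def vacuum_energy_density(lam: float) -> float:
    """Energy density (J/m^3) equivalent to a cosmological constant lam (1/m^2)."""
    return lam * C**4 / (8 * math.pi * G)


u = vacuum_energy_density(LAMBDA)
mass_density = u / C**2                      # kg/m^3, via E = m c^2
atoms_per_m3 = mass_density / HYDROGEN_ATOM_KG

print(f"vacuum energy density: {u:.1e} J/m^3")
print(f"equivalent mass density: {mass_density:.1e} kg/m^3")
print(f"that is about {atoms_per_m3:.1f} hydrogen atoms per cubic meter")
```

In other words, the measured vacuum energy amounts to only a few hydrogen atoms’ worth of mass in every cubic meter of space.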

To put that number in perspective, if you took the mass of one single atom, and then divided that by the mass of the whole entire Earth, you’d get roughly that same number. So again, based on the values astronomers can measure, lambda is super-tiny.
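The atom-versus-Earth comparison is easy to check. This sketch assumes the lightest atom, hydrogen, since the transcript doesn’t name one:

```python
import math

HYDROGEN_ATOM_KG = 1.674e-27  # mass of one hydrogen atom (assumed choice of atom)
EARTH_MASS_KG = 5.972e24      # mass of Earth

ratio = HYDROGEN_ATOM_KG / EARTH_MASS_KG
print(f"atom / Earth = {ratio:.1e}")
print(f"nearest power of ten: 10^{round(math.log10(ratio))}")
```

A heavier atom such as iron pushes the ratio up by a factor of about 56, so “roughly” is doing some work here, but the power of ten lands in the same neighborhood.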

Or is it? You’ve probably heard of the field called quantum mechanics, which deals with tiny things: electrons, photons of light, stuff like that. And it’s hard to overstate how good quantum mechanics is. It’s the single most accurate theory in the history of science. I’m not exaggerating. It is rock-solid stuff.

Well, quantum mechanics happens to make certain predictions about the vacuum energy. For instance, that every single particle in the universe—every electron, every proton, every dang quark—should add to the vacuum energy. So should all those ghost particles I mentioned before that blip into and out of existence for fractions of a second. They add to the vacuum energy, too.

And given all the gajillions of gajillions of particles in the universe, quantum mechanics suggests that the vacuum energy should be huge—utterly gargantuan. The predicted value is something like 10 to the 69th power. That’s a 1 followed by 69 zeros. All the atoms in the whole Earth only number something like 10 to the 50th power. Ten to the 69th would be all the atoms in 10 billion billion Earths.
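The transcript doesn’t spell out where 10⁶⁹ comes from. One common back-of-the-envelope estimate, assumed here, lets quantum effects contribute right up to the Planck scale, which gives a lambda of roughly one over the Planck length squared, in inverse square meters. The second half checks the Earth comparison, assuming an average atomic mass of about 27 atomic mass units for Earth’s mix of iron, oxygen, silicon, and magnesium (a rough figure):

```python
import math

HBAR = 1.0546e-34            # reduced Planck constant, J s
G = 6.674e-11                # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8                  # speed of light, m/s
EARTH_MASS_KG = 5.972e24
ATOMIC_MASS_UNIT_KG = 1.6605e-27
MEAN_ATOMIC_MASS_U = 27      # assumed rough average for Earth's composition

# Planck-scale estimate of lambda: one over the Planck length squared.
planck_length = math.sqrt(HBAR * G / C**3)   # meters
lambda_planck = 1 / planck_length**2         # inverse square meters
print(f"Planck length: {planck_length:.2e} m")
print(f"1 / Planck length^2: {lambda_planck:.1e} per m^2")

# How many Earths' worth of atoms make 10^69?
atoms_in_earth = EARTH_MASS_KG / (MEAN_ATOMIC_MASS_U * ATOMIC_MASS_UNIT_KG)
earths_needed = 1e69 / atoms_in_earth        # using the transcript's 10^69
print(f"atoms in Earth: {atoms_in_earth:.1e}")
print(f"Earths to reach 10^69 atoms: {earths_needed:.0e}")
```

The estimate lands at a few times 10⁶⁹, and about ten billion billion (10¹⁹) Earths’ worth of atoms, matching the transcript’s round numbers to within the slop of the assumptions.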

So there’s the dilemma. According to what we can measure with the accelerating stars, the vacuum energy seems tiny, tiny, tiny: 10 to the minus 52nd. But according to what we can predict with the most accurate scientific theory in history, the vacuum energy must be huge, huge, huge: around 10 to the 69th.

Overall, that’s a discrepancy of 10 raised to the 121st power. If you took all the atoms in the universe, that’s only around 10 to the 80th. That means this discrepancy is a hundred thousand billion billion billion billion times bigger than the number of atoms in the universe. That’s big.
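Because every figure here is a power of ten, the arithmetic can be checked exactly with Python’s fractions module, using the transcript’s own rounded exponents:

```python
from fractions import Fraction

measured = Fraction(1, 10**52)   # observed lambda, about 10^-52
predicted = 10**69               # quantum estimate, about 10^69
atoms_in_universe = 10**80       # rough count of atoms in the observable universe

discrepancy = predicted / measured           # exact: 10^69 / 10^-52
print(discrepancy == 10**121)

times_atoms = discrepancy / atoms_in_universe
print(times_atoms == 10**41)

# "A hundred thousand billion billion billion billion" = 10^5 * (10^9)^4,
# using short-scale billions (10^9).
print(10**5 * (10**9)**4 == times_atoms)
```

Note that “a thousand billion billion billion billion” would be 10³ × (10⁹)⁴ = 10³⁹, a hundred times too small.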

So you can see why this discrepancy has been called “the worst prediction in the history of science.” In fact, it’s almost impressive to get something wrong by a hundred thousand billion billion billion billion times the number of atoms in the universe. But that’s the miracle of modern science.

So, how do we resolve this problem? No one knows. It’s a big huge glaring embarrassment that we simply can’t resolve right now.

But honestly, that embarrassment isn’t necessarily a bad thing. Because something similar happened before. And when scientists resolved that earlier embarrassment, it led to one of the most profound revolutions in the history of science.

To understand the way forward, we’ll have to jump back in time again. Way back in the late 1800s, scientists faced something called the ultraviolet catastrophe. It arose from work on heat.

Imagine you have something that’s hot, like a potbelly stove with a glowing fire inside. It’s giving off heat-energy, in the form of light rays. And those light rays come in a variety of types. Some rays are infrared light. Some are visible light, the reds and oranges and yellows. And so on.

Now, in the late 1800s, a few scientists decided they wanted to be more precise here. Instead of just saying that “some” of the emitted light was infrared and “some” was orange or yellow, they wanted to put a number on things. At 1000 degrees, exactly how much of the energy given off was from infrared light? How much from orange or yellow? And how did those numbers change at 2000 degrees or 3000 degrees?
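One of the patterns those measurements turned up can be sketched with Wien’s displacement law, which says the wavelength where a hot object glows brightest is inversely proportional to its temperature. The transcript doesn’t give a temperature scale, so this sketch assumes kelvin and treats the stove as an ideal blackbody:

```python
WIEN_B = 2.898e-3            # Wien's displacement constant, m K
VISIBLE_RED_LIMIT_NM = 700   # roughly where visible red gives way to infrared


def peak_wavelength_nm(temp_kelvin: float) -> float:
    """Wavelength (nm) at which a blackbody at temp_kelvin emits most strongly."""
    return WIEN_B / temp_kelvin * 1e9


for temp in (1000, 2000, 3000):
    peak = peak_wavelength_nm(temp)
    band = "infrared" if peak > VISIBLE_RED_LIMIT_NM else "visible or shorter"
    print(f"{temp} K: peak near {peak:.0f} nm ({band})")
```

Even at 3,000 K the peak sits in the infrared; the visible glow is the short-wavelength tail, which strengthens quickly as the temperature rises.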

Eventually, the scientists came up with an equation. You plugged in the temperature, and the equation spit out exactly how much infrared would be emitted, or how much orange or yellow. And for most types of light, this equation worked great.

But things got funny when you started shifting from visible light into the ultraviolet realm. In short, the equation started making nonsensical predictions. Without getting into too much technical detail, the equation basically predicted that your potbelly stove should be emitting loads of ultraviolet light, dangerous amounts of it. And not just ultraviolet light, but high-energy x-rays as well, and even gamma rays, the type produced in atomic bombs.
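The troublesome formula is usually identified as the Rayleigh-Jeans law, and the formula that eventually replaced it is Planck’s law. The sketch below compares the two at 1,000 kelvin (an assumed temperature) across a few frequencies, treating the stove as an ideal blackbody; both functions return spectral radiance in W·m⁻²·Hz⁻¹·sr⁻¹:

```python
import math

H = 6.626e-34     # Planck constant, J s
K_B = 1.381e-23   # Boltzmann constant, J/K
C = 2.998e8       # speed of light, m/s


def rayleigh_jeans(nu: float, temp: float) -> float:
    """Classical formula: grows as nu**2 with no upper limit."""
    return 2 * nu**2 * K_B * temp / C**2


def planck(nu: float, temp: float) -> float:
    """Planck's law: the exponential in the denominator cuts off high frequencies."""
    x = H * nu / (K_B * temp)
    return 2 * H * nu**3 / C**2 / math.expm1(x)


T = 1000.0  # kelvin (assumed)
bands = [("far infrared", 1e12), ("infrared", 1e14), ("visible", 5e14), ("ultraviolet", 1.5e15)]
for label, nu in bands:
    print(f"{label:>12}: classical {rayleigh_jeans(nu, T):.2e}, Planck {planck(nu, T):.2e}")
```

At low frequency the two agree to within a few percent. By the ultraviolet, the classical formula is too large by a factor of more than 10²⁰, and because it keeps growing as ν², the total energy it predicts is infinite.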

Which was obvious nonsense. Stoves don’t emit x-rays and gamma rays. But no one could tell what was wrong with the equation. And again, it worked quite well—near perfectly—for low-energy light.

This state of affairs, with absurd predictions in the ultraviolet realm and beyond, was dubbed the ultraviolet catastrophe. It was every bit as big an embarrassment then as the vacuum-energy density discrepancy is now.

In desperation, the scientists back then started fiddling around with the equation and fudging things, kind of like Einstein did. But whereas Einstein just slapped a “plus lambda” on, the work these other scientists did was much, much more involved and complicated. They wracked their brains for years.

They finally tried something ridiculous. Again, the fundamental problem involved how much heat-energy something like a stove would give off. Well, they proposed that this energy came in discrete packets. As an analogy, this was like saying that the energy emitted could only have values that were whole numbers. So you could have a value of 1 or 2 or 3, but never 2.4 or 3½. Only discrete, whole numbers were allowed.

Now, as far as anyone knew, there was no basis in reality for this. They just wanted to make the equation work and resolve the stupid catastrophe. And sure enough, when you make that assumption, that energy comes in discrete packets, voilà: the ultraviolet catastrophe disappeared. The equations gave sensible answers, and stoves were no longer seething cauldrons of x-rays.
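The effect of the discrete-packet assumption shows up in a single light wave (one “mode”) inside the stove. Classically, each mode carries an average energy of kT no matter its frequency, which is what blows up in the ultraviolet. If the energy may only be a whole number n times hν, where h is Planck’s constant, weighting each allowed value by the Boltzmann factor e^(−E/kT) gives a much smaller average at high frequency. The sketch sums those weights directly and checks the sum against the closed form hν/(e^(hν/kT) − 1); the temperature and frequencies are illustrative assumptions:

```python
import math

H = 6.626e-34     # Planck constant, J s
K_B = 1.381e-23   # Boltzmann constant, J/K


def mean_energy_quantized(nu: float, temp: float, n_max: int = 2000) -> float:
    """Average energy of one mode whose energy may only be n*h*nu (n = 0, 1, 2, ...),
    each weighted by exp(-E / kT). The sum is cut off after n_max terms."""
    packet = H * nu
    beta = 1.0 / (K_B * temp)
    weights = [math.exp(-n * packet * beta) for n in range(n_max)]
    return sum(n * packet * w for n, w in enumerate(weights)) / sum(weights)


def mean_energy_closed_form(nu: float, temp: float) -> float:
    """Closed-form result for the same average."""
    return H * nu / math.expm1(H * nu / (K_B * temp))


T = 1000.0  # kelvin (assumed)
for nu in (1e12, 5e14, 1.5e15):
    quantized = mean_energy_quantized(nu, T)
    agrees = math.isclose(quantized, mean_energy_closed_form(nu, T), rel_tol=1e-9)
    print(f"nu = {nu:.1e} Hz: quantized / classical kT = {quantized / (K_B * T):.3e}, "
          f"closed form agrees: {agrees}")
```

At low frequency the packets are small compared with kT and the classical answer is nearly recovered. At ultraviolet frequencies a single packet costs far more than kT, so the mode is almost never excited, and that suppression is what removes the catastrophe.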

But then something magical happened. In completely separate work, people started experimenting with atoms, and trying to figure out how individual atoms absorbed and emitted energy. And lo and behold, they found that—holy crap—energy did actually come in discrete packets. This assumption, which at first had no basis in reality, was found to be a fundamental feature of reality.

These discrete packets of energy are now called “quanta.” And this discovery of discrete quanta eventually gave rise to the field of quantum mechanics. In other words, the need to resolve the ultraviolet catastrophe led to the founding of one of the most successful fields in all of science.

So maybe something similar will happen with the vacuum-energy catastrophe. Maybe there’s some scientist out there right now fudging things and adding random Greek letters in sheer desperation—and watching in delight as something extraordinary happens. It might even seem like a mistake at first. But as Einstein showed us, sometimes big blunders are the only way forward.

That’s it for today, but be sure to check out next week’s episode on the blunders of Stephen Hawking.