Making life artificially wasn’t as big a deal for the ancients as it is for us. Anyone was supposed to be able to do it with the right recipe, just like baking bread. The Roman poet Virgil described a method for making synthetic bees, a practice known as bougonia, which involved beating a poor calf to death, blocking its nose and mouth, and leaving the carcass on a bed of thyme and cinnamon sticks. “Creatures fashioned wonderfully appear,” he wrote, “first void of limbs, but soon awhir with wings.”

This was, of course, simply an expression of the general belief in spontaneous generation: the idea that living things might arise from nothing within a fertile matrix of decaying matter. Roughly 300 years earlier, Aristotle, in his book On the Generation of Animals, explained how this process yielded vermin, such as insects and mice. No one doubted it was possible, and no one feared it either (apart from the inconvenience); one wasn’t “playing God” by making new life this way.

The furor that has sometimes accompanied the new science of synthetic biology—the attempt to reengineer living organisms as if they were machines for us to tinker with, or even to build them from scratch from the component parts—stems from a decidedly modern construct, a “reverence for life.” In the past, fears about this kind of technological hubris were reserved mostly for proposals to make humans by artificial means—or as the Greeks would have said, by techne, art.

And if the idea of fabricating humans carried a whiff of the forbidden in antiquity, the reason had more to do with a general distrust of techne than with disapproval of people-making. There was a sense that machines—what the Greeks called mechanomai—were duplicitous contrivances that made things function contrary to nature. In Aristotle’s physics, heavy objects were innately apt to descend, whereas machines could make them do the opposite. Plato, Aristotle’s teacher, distrusted all art (whether painting or inventing) as a deceitful imitation of nature.

Because these ancient prejudices against techne still persisted in the 17th century, the English philosopher Francis Bacon felt it necessary to offer a staunch defense of the artificial. He wasn’t wholly successful, as the same distrust of the “artificial” and “synthetic” persists today. Some of the antipathy that synthetic biology has faced stems from this long-standing bias. But another deep-seated source of the distrust comes from late-medieval Christian theology, in which hubristic techne that tampered with human life risked invoking God’s displeasure. In the modern, secular version the sacredness of human life has expanded to encompass all of nature—and we hazard nature’s condemnation by daring to intervene in living things. That’s the kind of transgression now implied by one of the common objections to the pretensions of synthetic biology: it’s “unnatural.”

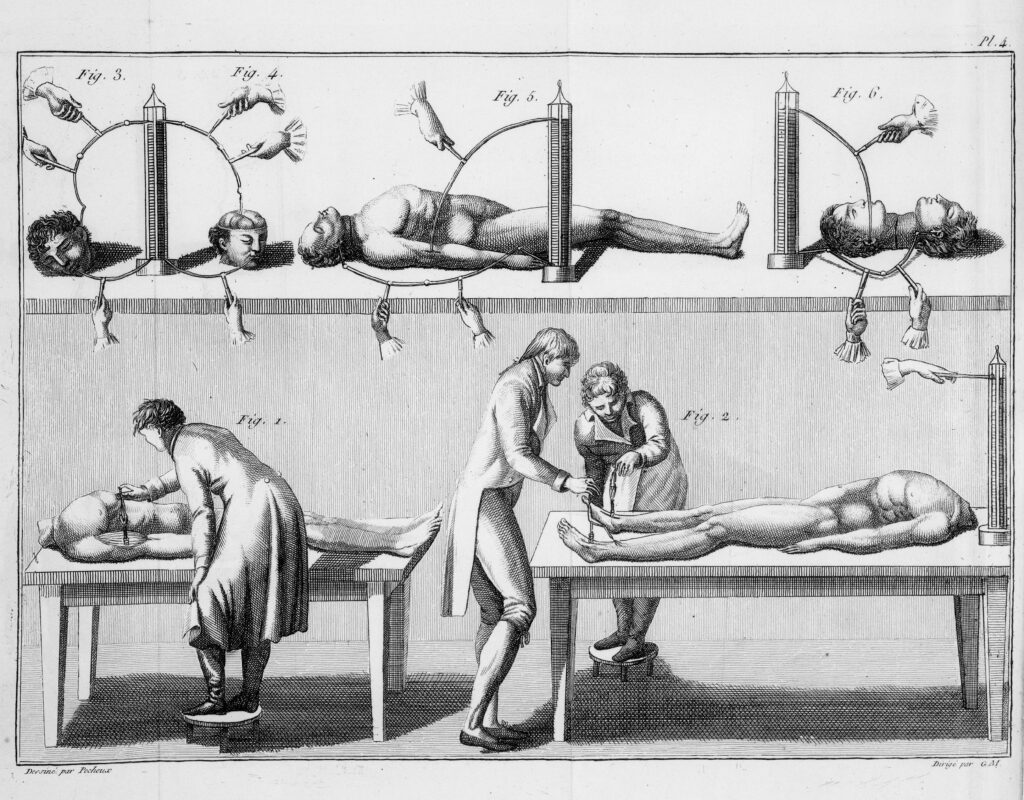

The Faustian Bargain

When theologians frowned on claims by alchemists that they could make an artificial being known as a homunculus, their reasoning wasn’t necessarily what we imagine. The charge wasn’t really one of playing God but rather of forcing God’s hand. For although we might be able to animate matter in this arcane way, only God could give it a soul. Would God then have to intervene to ensoul the homunculus? And would it, not being born of the line of Adam, be free of original sin and therefore have no need of Christ’s salvation?

These were the questions that troubled clerics. Perhaps they troubled the homunculus, too: the one created by Faust’s assistant Wagner in Goethe’s retelling of that old story yearns to be fully human, since only then can he escape the glass vessel in which he was made. “I myself desire to come of birth,” he says.

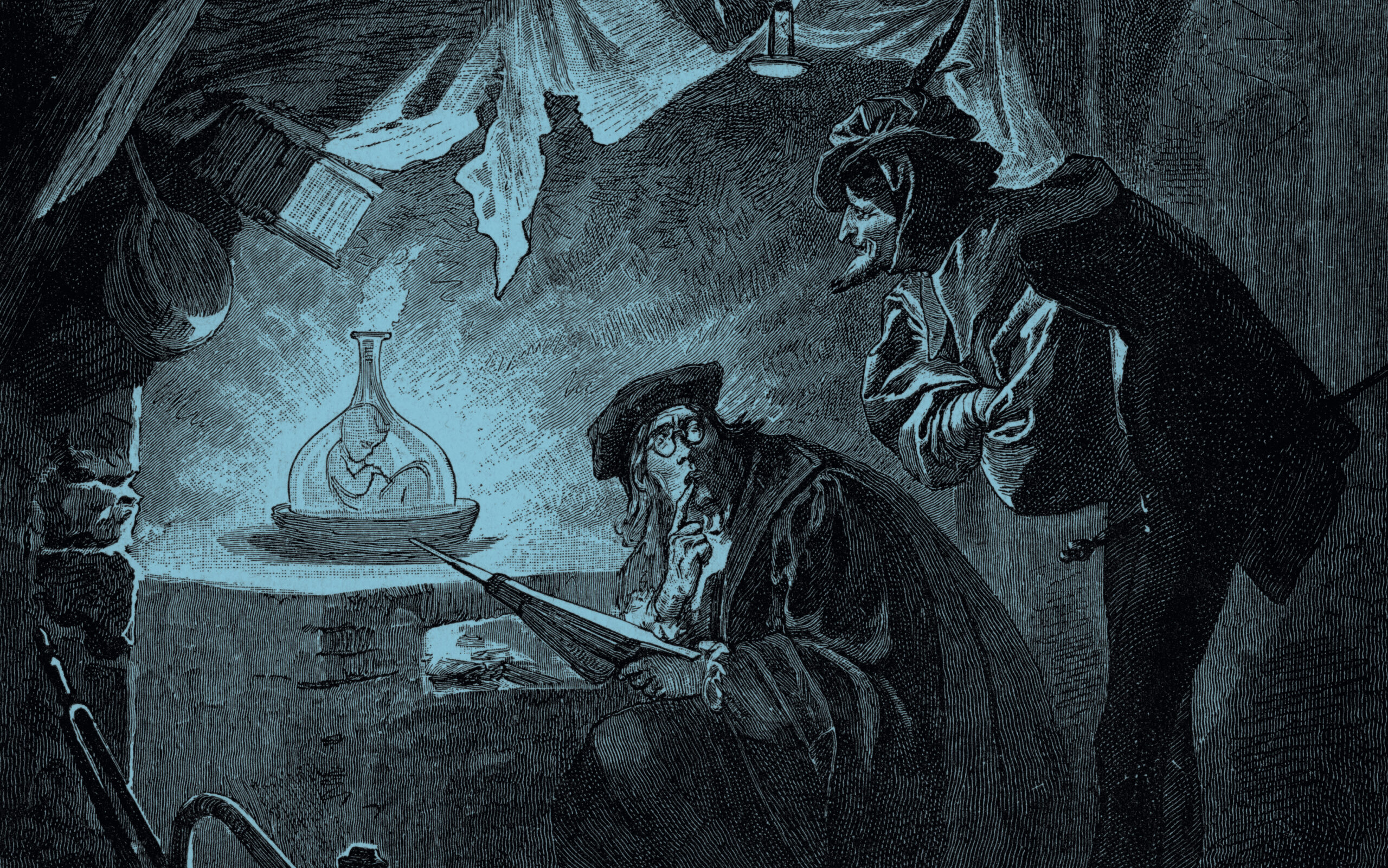

The legend of Faust, which harks back to the biblical magician Simon Magus who battled Saint Peter with magic, provides the touchstone for fears about scientists overstepping the mark and unwittingly unleashing destructive forces. Faust was, of course, the blueprint for the most famous cautionary tale of science dabbling in the creation of life: Mary Shelley’s Frankenstein. The novel, first published anonymously in 1818 with a foreword from Percy Shelley (Mary’s husband, who some suspected was the author), reinvents the Faust myth for the dawning age of science, drawing on the biology of Charles Darwin’s grandfather Erasmus, the chemistry of Humphry Davy, and the electrical physiology of Italy’s Luigi Galvani. Percy Shelley wrote that Erasmus Darwin’s speculations, expounded in such works as Zoonomia, or the Laws of Organic Life (1794), supported the idea that Victor Frankenstein’s reanimation of dead matter was “not of impossible occurrence.” And the popular science lecturer Adam Walker, a friend of chemist Joseph Priestley, wrote that Galvani’s experiments on electrical physiology had demonstrated the “relationship or affinity [of electricity] to the living principle.” Making life was in the air in the early 19th century, and Frankenstein looks, in retrospect, almost inevitable.

Mary Shelley’s doctor and his monstrous creation are now invoked as a knee-jerk response to all new scientific interventions in life. They featured prominently in media coverage of in vitro fertilization (IVF) and cloning (“The Frankenstein myth becomes reality,” wrote the New York Times apropos of IVF), genetic modification of plant crops (“Frankenfoods”), and now the creation of “synthetic life forms” by synthetic biology (“Frankenbugs”). The message is clear: technology thus labeled is something unnatural and dangerous, and warrants our firm disapproval.

Primal Slime

The prehistory of synthetic biology isn’t all Faustian. The apparent inclination of life to spring from lifeless matter stimulated the notion of an animating principle that was pervasive in the world, ready to quicken substances when the circumstances were clement. In this view a property that became known as the “vital force” inhered in the very constituents (the corpuscles, or molecules) of matter, and life appeared by degrees when enough of it accumulated. In his strange story D’Alembert’s Dream (1769) the French philosopher Denis Diderot compares the coherent movement of masses of molecule-like “living points” to a swarm of bees, of the kind that Virgil believed could be conjured from a dead cow. As Diderot’s contemporary, the French naturalist Georges-Louis Leclerc, the Comte de Buffon, put it,

Vitalism is often derided by scientists today as a kind of prescientific superstition, but in fact this kind of provisional hypothesis is precisely what is needed for science to make progress on a difficult problem. By supposing that life was immanent in matter, early scientists were able to naturalize it and distinguish it from a mysterious, God-given agency, and so make it a proper object of scientific study.

Put this way, we should not be surprised that Friedrich Wöhler’s synthesis of urea (a molecule that hitherto only living creatures could produce) from an ammonium salt in 1828 posed no deep threat to vitalism, despite its being often cited as the beginning of the end for the theory. The vital potential of molecules was all a matter of degree; so there was no real cause for surprise that a molecule associated with living beings could be made from seemingly inanimate matter. In fact, the dawning appreciation during the 19th century that “organic chemistry”—the science of the mostly carbon-based molecules produced by and constituting living things—was contiguous with the rest of chemistry only deepened the puzzle of what life is, while at the same time reinforcing the view that life was a matter for scientists rather than theologians.

The early chemists believed that the secret of life must reside in chemical composition: animating matter was just a question of getting the right blend of ingredients. In 1835 the French anatomist Félix Dujardin claimed to have made the primordial living substance by crushing microscopic animals to a gelatinous pulp. Four years later the Czech physiologist Jan Purkinje gave this primal substance a name: protoplasm, which was thought to be some kind of protein and to be imbued with the ability to move of its own accord.

In the 1860s Charles Darwin’s erstwhile champion Thomas Henry Huxley claimed to have found this primitive substance, which he alleged to be the “physical basis of life,” the “one kind of matter which is common to all living beings.” He identified this substance with a kind of slime in which sea floor–dwelling organisms seemed to be embedded. The slime contained only the elements carbon, hydrogen, oxygen, and nitrogen, Huxley said. (Actually his protoplasm turned out to be the product of a chemical reaction between seawater and the alcohol used to preserve Huxley’s marine specimens.) Meanwhile, the leading German advocate of Darwinism, Ernst Haeckel, declared that there is a kind of vital force in all matter, right down to the level of atoms and molecules; the discovery of molecular organization in liquid crystals in the 1880s seemed to him to vindicate the hypothesis.

Haeckel was at least right to focus on organization. Ever since the German physiologist Theodor Schwann proposed in the mid-19th century that all life is composed of cells, the protoplasm concept was confronted by the need to explain the orderly structure of life: jelly wasn’t enough. Spontaneous generation was finally killed off by the experiments of Louis Pasteur and others showing that sterile mixtures remained that way if they were sealed to prevent access to the microorganisms that Pasteur had identified under the microscope. But vitalism didn’t die in the process, instead mutating into the notion of “organic organization”—the mysterious propensity of living beings to acquire structure and coordination among their component molecular parts, which biologists began to discern when they inspected cells under the microscope. In other words, the organization of life apparent at the visible scale extended not only to the cellular level but beyond. The notion of a universal protoplasm, meanwhile, became untenable once the diversity of life’s molecular components, in particular the range of protein enzymes, became apparent through chemical analysis in the early 20th century.

Primal jelly had a notable swan song. In 1899 the Boston Herald carried the headline “Creation of Life . . . Lower Animals Produced by Chemical Means.” Setting aside the possibly tongue-in-cheek corollary adduced in the headline “Immaculate Conception Explained,” the newspaper described the research of German physiologist Jacques Loeb, who was working at the center for marine biology in Woods Hole, Massachusetts. Loeb had, in fact, done nothing so remarkable; he had shown that an unfertilized sea-urchin egg could be induced to undergo parthenogenesis, dividing and developing, by exposure to certain salts. Loeb’s broader vision, however, set the stage for an interview in 1902 that reports him as saying,

Loeb’s words sound almost like the deranged dream of a Hollywood mad scientist. Scientific American even dubbed Loeb “the Scientific Frankenstein.” Needless to say, Loeb was never able to do anything of the sort; but his framing of controlling life through an engineering perspective proved prescient, and was most prominently put forward in his book The Mechanistic Conception of Life (1912).

On the Circuit

Loeb’s dream of “playing” with life could not be realized until we had a better conception of life’s components. Finding those components was the mission of 20th-century molecular biology, which emerged largely from the studies of the chemical structure and composition of proteins using X-ray crystallography, pioneered from the 1930s to the 1950s by J. Desmond Bernal, William Astbury, Dorothy Hodgkin, Linus Pauling, and others. These molecules looked like tiny machines, designed and shaped by evolution to do their job.

But of course molecular biology wasn’t just about proteins. What really changed the game was the discovery of what seemed to be the source of life’s miraculous organization. It was not, as many had anticipated, a protein that carried the information needed to regulate the cell, but rather a nucleic acid: DNA. When James Watson and Francis Crick used the X-ray crystallographic data of others, including that of Rosalind Franklin, to deduce the double-helical shape of the molecule in 1953, not all scientists believed that DNA was the vehicle of the genes that appeared to pass instructions from one generation to the next. Watson and Crick’s work showed how that information was encoded—in a digital sequence of molecular building blocks along the helix—and moreover implied a mechanism by which the information could be copied during replication.
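
For readers who think in code, the copying logic implied by base pairing can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not a model of real replication chemistry: the function names `partner_strand` and `replicate` and the six-letter example sequence are invented here, and the only biology used is the pairing rule (A with T, G with C) and the fact that the two strands of the helix run in opposite directions.

```python
# Toy sketch: DNA as a four-letter digital code that can be copied by templating.
# All names and the example sequence are hypothetical, chosen for illustration.

PAIRING = {"A": "T", "T": "A", "G": "C", "C": "G"}


def partner_strand(strand):
    """Return the strand that pairs with `strand`.

    The two strands of the helix run antiparallel, so the partner is
    written here as the reverse complement.
    """
    return "".join(PAIRING[base] for base in reversed(strand))


def replicate(duplex):
    """Copy a duplex by letting each old strand template a new partner."""
    top, bottom = duplex
    daughter_a = (top, partner_strand(top))        # old top, new bottom
    daughter_b = (partner_strand(bottom), bottom)  # new top, old bottom
    return daughter_a, daughter_b


original = ("ATGGCA", partner_strand("ATGGCA"))
daughter_a, daughter_b = replicate(original)
```

Each daughter duplex carries the same sequence as the original, because either strand alone is enough to specify the other. That redundancy is what made the double helix look like a copying mechanism as well as a store of information.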

If these were indeed “instructions for life,” then chemistry could be used to modify them. That was the business of genetic engineering, which took off in the 1970s when scientists figured out how to use natural enzymes to edit and paste portions of “recombinant” DNA. Molecular biologists were now thinking about life as a form of engineering, amenable to design.

Synthetic biology has sometimes been called “genetic engineering that works”: using the same cut-and-paste biotechnological methods but with a sophistication that gets results. That definition is perhaps a little unfair because “old-fashioned” genetic engineering worked perfectly well for some purposes: by inserting a gene for making insulin into bacteria, for example, this compound, vital for treating diabetes, can be made by fermentation of microorganisms instead of having to extract it from cows and pigs. But deeper interventions in the chemical processes of living organisms may demand much more than the addition of a gene or two. Such interventions are what synthetic biology aims to achieve.

Take the production of the antimalarial drug artemisinin, the discovery of which was the subject of the 2015 Nobel Prize in medicine. This molecule offers the best protection currently available against malaria, working effectively when the malaria parasite has developed resistance to most other common antimalarials. Artemisinin is extracted from a shrub cultivated for the purpose, but the process is slow and has been expensive. (Prices have dropped recently.) Over the past decade researchers at the University of California, Berkeley, have been attempting to engineer the artemisinin-making machinery of the plant into yeast cells so that the drug can be made cheaply by fermentation. It’s complicated because the molecule is produced in a multistep process involving several enzymes that have to transform the raw ingredient stage by stage into the complex final molecule, with each step being conducted at the right moment. In effect this means equipping yeast with the genes and regulating processes needed for a whole new metabolic pathway, or sequence of biochemical reactions—an approach called metabolic engineering, amounting to the kind of designed repurposing of an organism that is a core objective of synthetic biology.

Artemisinin synthesis in yeast (more properly, semisynthesis since it begins with a precursor of the drug molecule harvested from natural sources) is often called the poster child of synthetic biology—not just because it works (the process is now entering commercial production) but because it has unambiguously benevolent and valuable aims. Creating useful products, advocates say, is all they are trying to do: not some Frankenstein-style creation of unnatural monstrosities but the efficient production of much-needed drugs and other substances, ideally using biochemical pathways in living organisms as an alternative to the sometimes toxic, solvent-laden processes of industrial chemistry.

Imagine bacteria and yeast engineered to make “green” fuels, such as hydrogen or ethanol, fed by plant matter and negating the need to mine and burn coal and oil. Imagine easily biodegradable plastics produced this way rather than from oil. Craig Venter, who made his name (and money) developing genome-decoding technologies, has made such objectives a central element of the research conducted at his J. Craig Venter Institute (JCVI) in Rockville, Maryland. Last April scientists at JCVI announced that they have devised ways to engineer microalgae called diatoms, using the methods of synthetic biology, so that they join bacteria and yeast as vehicles for making biofuels and other chemicals.

In effect JCVI is trying to create microscopic living factories. The same motive underpinned Venter’s creation of an alleged “synthetic organism” in 2010, another of the milestones of synthetic biology—the Frankenbug, in the words of some opponents of genetic manipulation. Whether those microbes can be considered truly artificial is a matter of debate. The JCVI scientists used well-established chemical methods to build an entire genome from DNA, based on that of a naturally occurring bacterium called Mycoplasma mycoides but with some genetic sequences added and others omitted. They then took cells of a closely related Mycoplasma bacterium, extracted their pristine DNA, inserted the artificial replacements, and “booted up” the modified cells as if they were computers with a new operating system. The cells worked just as well with their new bespoke DNA.

The aim was not some hubristic demonstration of control over life but rather verification that bacterial cells can be fitted with new instructions that might be a stripped-down, simplified version of their natural ones: a kind of minimal chassis on which novel functions can be designed and constructed. The full genetic workings of even the simplest bacteria are not completely understood, but if their genomes can be simplified to remove all functions not essential to sustain life, the task of designing new genetic pathways and processes becomes much easier. This March the JCVI team described such a “minimal” version of the Mycoplasma bacterium.

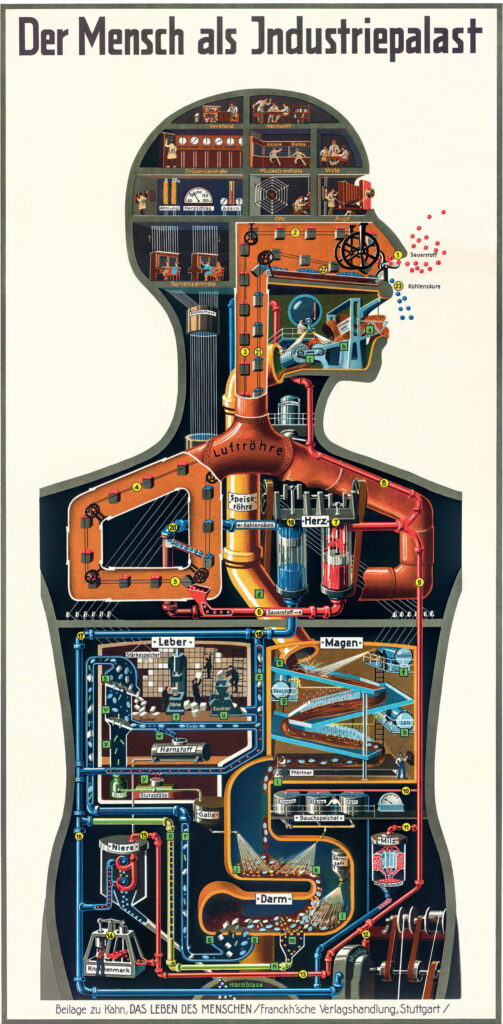

The language of this new science is that of the engineer and designer: the language of the artisan, not of the natural philosopher discovering how nature works. This way of thinking about life goes back at least to René Descartes, who conceived of the body as a machine, a mechanism of levers, pulleys, and pumps. In Descartes’s time this clockwork view of life could lead to nothing more than crude mechanical simulacra: the automata fashioned by watchmakers and inventors, ingenious and uncanny contraptions in themselves but ultimately no more animated than the hands of a clock. But synthetic biology brings the Newtonian, mechanistic philosophy to the very stuff of life, to the genes and enzymes of living cells: they are now the cogs and gears that can be filed, spring-loaded, oiled, and assembled into molecular mechanisms. Then we have no mere simulation of life but life itself.

Yet the language now is not so much that of clockwork and mechanics but of the modern equivalent: our latest cutting-edge technology, namely electronics and computation. Ever since biologists François Jacob, Jacques Monod, and others showed in the 1960s how genes are regulated to control their activity, genetics has adopted the lexicon of cybernetic systems theory, which was developed to understand how to control complex technological systems and found applications in electronic engineering, robotics, communications, and computation. That is to say, different components in the genome are said to be linked into circuits and regulated by feedback loops and switches as they pass signals from one unit to another.

In this view genomes perform their role of organizing and regulating life in a modular and hierarchical fashion, much as electronic components are connected into basic circuit elements, such as amplifiers or logic devices, which are in turn arranged so as to permit higher-level functions, such as memory storage and retrieval or synchronization of signals.

This notion of genetic circuitry is the conceptual platform on which synthetic biology is being built. In the BioBricks scheme created by researchers at the Massachusetts Institute of Technology (MIT), genes may be combined and compiled into a Registry of Standard Biological Parts: an open-access catalog of biological gene circuits that you can peruse just as you would a RadioShack catalog of electronic components, looking for the devices you need to realize your design. The aim is that with sufficient attention to standardization these biological parts will work as “plug and play,” without needing lots of refinement and tuning for each application.

This plug-and-play concept works remarkably well. Early triumphs for synthetic biology included the demonstration of gene circuits that acted as oscillators (enabling the rate of synthesis of a protein to be turned periodically up and down) and toggle switches (allowing the controllable turning on and off of protein synthesis using a chemical or light signal). In this way researchers made bacteria that lit up periodically via a fluorescent protein whose production was linked to a genetic oscillator circuit. Since 2004 the MIT team has staged an annual event called the International Genetically Engineered Machine competition, in which student teams vie to present the most innovative synthetic biology project. Entries have included “E. chromi,” genetically modified E. coli that can change to all colors of the rainbow in response to particular signals (such as toxic gases or food contaminants), microbial fuel cells that generate electricity from engineered E. coli, and bacterial photography using E. coli modified to produce chemicals that turn a film black in response to light.
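
To make the idea of a genetic toggle switch concrete, here is a minimal numerical sketch of two genes that each repress the other, a common textbook form of such a circuit. It is an illustration under stated assumptions, not the design of any circuit mentioned above: the equations are dimensionless, the parameter values (`alpha`, `n`, `dt`), the starting concentrations, and the function name `simulate` are all chosen here for convenience, and the "inducer" is modeled crudely as switching off repressor `u`'s ability to repress.

```python
# Toy model of a genetic toggle switch: two mutually repressing genes.
# Assumed dimensionless equations (a common textbook form):
#   du/dt = alpha / (1 + v**n) - u
#   dv/dt = alpha / (1 + u**n) - v
# All parameter values are illustrative assumptions, not measured quantities.


def simulate(u, v, duration, inducer=False, alpha=10.0, n=2, dt=0.01):
    """Euler-integrate the two repressor levels and return the final (u, v)."""
    for _ in range(int(duration / dt)):
        # While the inducer is present, repressor u is treated as inactive,
        # so gene v is produced at its full rate.
        u_active = 0.0 if inducer else u
        du = alpha / (1.0 + v ** n) - u
        dv = alpha / (1.0 + u_active ** n) - v
        u, v = u + du * dt, v + dv * dt
    return u, v


state_a = simulate(2.0, 1.0, duration=20)           # settles with u high, v low
pulsed = simulate(*state_a, duration=5, inducer=True)  # brief chemical signal
state_b = simulate(*pulsed, duration=20)            # signal gone: v stays high
```

The point of the sketch is the memory: a transient signal flips the circuit from one stable state to the other, and the new state persists after the signal is removed. An oscillator can be built on the same principle by arranging repressors in a ring so that no state is stable.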

Life as Information

The “systems engineering” orientation of synthetic biology reflects the latest shift in our view of life. In the age of alchemy, life was seen as a sort of vital spirit, an occult force that pervaded nature. Newton and Descartes made it a matter of mechanics; Galvani turned it into an electrical phenomenon, while the burgeoning of chemistry in the 19th century made it a question of chemical composition. But ever since Watson and Crick’s discovery married neo-Darwinian genetics to molecular biology, life has been increasingly regarded as a matter of information and is often described now as a digital code imprinted into the molecular memory that is the genetic sequence of DNA. Redesigning life, like computer engineering, is then a question of coding and circuit design.

Looked at historically, our current view of life seems likely to become as obsolete as the old ones. But perhaps we shouldn’t view that succession as simply a replacement of one idea by another. Some aspects of all the old models can still be defended: a machine metaphor works pretty well, at least for the motor proteins that move objects around in the cell. And the concept of cellular organization now makes more sense in light of contemporary ideas about molecular self-organization. The same will surely be true for the model of life as a form of computation. Yet insistence on a simple equivalence between the computer and the cell is clearly inadequate. The old picture of genes as fundamental units of information translated in linear and unique fashion into proteins that act as biology’s molecular workhorses is far too simple. Genomes are not blueprints for an organism any more than they are “books of life.” Life has a logic that we have yet to discern, and it doesn’t seem to map easily onto any technology currently known. For this reason alone we remain far from any genuinely de novo synthesis of life.

Playing God?

We may be surprised that early attempts to make “synthetic life” aroused so little controversy. But it was the insights of modern biology—of the unity of life at the molecular level—that made “life-making” controversial. Now, intervening in the fundamental processes of any living thing carries implications for us, too. Perhaps less obviously, these implications depend on the secularization of our view of life. Only when humans were no longer privileged beings favored and ensouled by God could the engineering of all living things seem problematic. The sacredness of human life has been generalized to a deification of nature precisely because our age is no longer morally ruled by Christian doctrine. So it’s no surprise that accusations of “playing God” with biotechnology come less from religious circles—there is no theological basis for the concept—than from secular ones.

Yet religion is only one form of myth—and myth more broadly still matters. Writing in 1924 about the “artificial” creation of human life by both IVF and hypothetical gestation outside the uterus, the biologist J. B. S. Haldane declared that

He was right, but scientists tend to forget why. They deplore the tendency to cast scientific advances in mythical terms so that the shadow of Faust and Frankenstein falls over every development in the modification and genesis of life. This frustration is understandable: the “Franken” trope is lazy journalism, an off-the-shelf bit of sensationalism calculated to produce an alluring frisson (along with righteous indignation) in readers. But one of the very functions of myth is to give shape to fears and dreams that we can barely articulate.

Synthetic biology seems to go to the heart of one of the deepest and oldest of these imaginings: the creation and control of life through techne. That grand goal might seem a long way from making a flashing bacterium. But synthetic biology has a potential that goes much further: not only might this field be transformative in the making of materials, medicines, and more, but it could reshape our conception of what living organisms are, what they can be, and how we might intervene to shape them. We should not find it surprising if such powers reawaken old myths and associations. To navigate the cultural debate we need to be aware of the historical influence of our myths, their buried moral messages, and the preconceptions and premonitions that they invoke. There never was a Faust who bargained with the devil, Frankenstein’s monster was never made, the Brave New World never arrived—but the real reason we are still invoking these images is that they still fit the shapes of our nightmares.