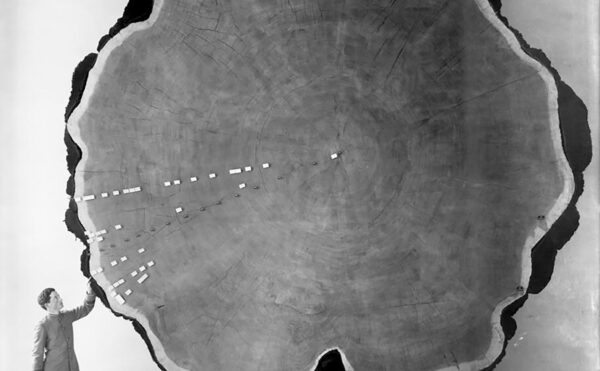

The story begins in a kitchen. Two young women in bonnets sit by shelves of crockery and an open window. Warm, yellow light streams in, and we see just a hint of blue sky. Inside, the colors are browns, sepias, and ochres. The first woman’s dress: brown. The shadows on her dress: darker brown. The table by the wall and the copper pots above: light brown, medium brown, and dark brown.

If art historians and conservators are right about Interior of a Kitchen, Martin Drölling’s painting from 1815, the artist had help from a surprising source—the grave. Scholars believe he relied heavily on a popular pigment of his time—mummy brown—a concoction made from ground-up Egyptian mummies. From the 16th to the 19th century many painters favored the pigment, and it remained available into the 20th century, even as supplies dwindled. In 1915 a London pigment dealer commented that one mummy would produce enough pigment to last him and his customers 20 years.

Nineteenth-century painters Eugène Delacroix, Sir Lawrence Alma-Tadema, and Edward Burne-Jones were just a few of the artists who found the pigment useful for shading, shadows, and, ironically, flesh tones. (On discovering the source of the pigment, Burne-Jones is said to have been horrified and felt compelled to bury his reserves of mummy brown.)

But it wasn’t just artists who were using ground-up bodies. Since the 12th century, Europeans had been eating Egyptian mummies as medicine. In later centuries unmummified corpses were passed off as mummy medicine, and eventually some Europeans no longer cared whether the bodies they were ingesting had been mummified or not. These practices, however strange, are just some of the many ways people have made something useful out of death.

A Gross Misunderstanding

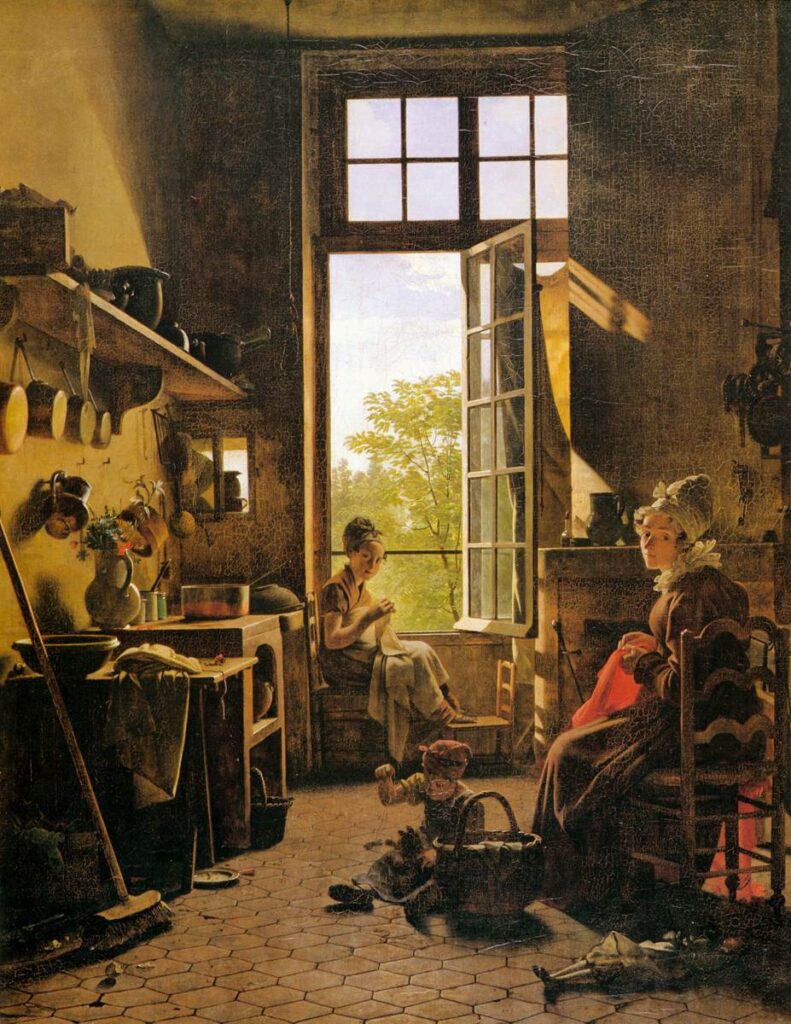

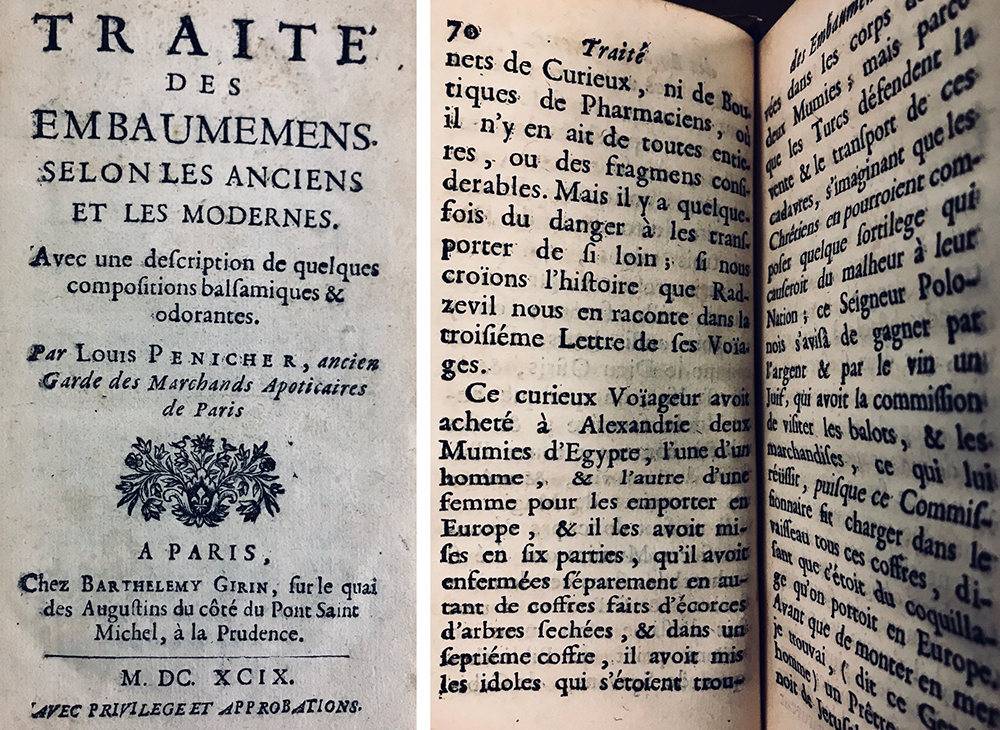

The eating of Egyptian mummies reached its peak in Europe in the 16th century. Mummies could be found on apothecary shelves in the form of bodies broken into pieces or ground into powder. Why did Europeans believe in the medicinal value of the mummy? The answer probably comes down to a string of misunderstandings.

Today we think of bitumen as asphalt, the black, sticky substance that coats our roads. It’s a naturally occurring hydrocarbon that has been used in construction in the Middle East since ancient times. (The book of Genesis lists it among the materials used to build the Tower of Babel.) The ancients also used bitumen to protect tree trunks and roots from insects and to treat an array of human ailments. It is viscous when heated but hardens as it cools, making it useful for stabilizing broken bones and creating poultices for rashes. In his 1st-century text Natural History, Roman naturalist Pliny the Elder recommends ingesting bitumen with wine to cure chronic coughs and dysentery, or combining it with vinegar to dissolve and remove clotted blood. Other uses included the treatment of cataracts, toothaches, and skin diseases.

Natural bitumen was abundant in the ancient Middle East, where it formed in geological basins from the remains of tiny plants and animals. It had a variety of consistencies, from semiliquid (known today as pissasphalt) to semisolid (bitumen). In his 1st-century pharmacopoeia, Materia Medica, the Greek physician Dioscorides wrote that bitumen from the Dead Sea was the best for medicine. Later scientists would learn that bitumen also has antimicrobial and biocidal properties and that the bitumen from the Dead Sea contains sulfur, also a biocidal agent.

While different cultures had their own names for bitumen (it was esir in Sumeria and sayali in Iraq), the 10th-century Persian physician Rhazes made the earliest known use of the word mumia for the substance, after mum, which means wax, referring to its stickiness. By the 11th century the Persian physician Avicenna used the word mumia to refer specifically to medicinal bitumen. We now call the embalmed ancient Egyptian dead “mummies” because when Europeans first saw the black stuff coating these ancient remains, they assumed it to be this valuable bitumen, or mumia. The word mumia took on a double meaning, referring both to the bitumen that flowed from nature and to the dark substance found on these ancient Egyptians (which may or may not have actually been bitumen).

As supplies of bitumen became increasingly scarce, perhaps partially because of its wonder-drug reputation, these embalmed cadavers presented a potential new source. So what if it had to be scraped from the surface of ancient bodies?

The meaning of mumia shifted in a big way in the 12th century when Gerard of Cremona, a translator of Arabic-language manuscripts, defined the word as “the substance found in the land where bodies are buried with aloes by which the liquid of the dead, mixed with the aloes, is transformed and is similar to marine pitch.” After this point the meaning of mumia expanded to include not just asphalt and other hardened, resinous material from an embalmed body but the flesh of that embalmed body as well.

Take Two Drops of Mummy and Call Me in the Morning

Eating mummies for their reserves of medicinal bitumen may seem extreme, but this behavior still has a hint of rationality. As with a game of telephone, where meaning changes with each transference, people eventually came to believe that the mummies themselves (not the sticky stuff used to embalm them) possessed the power to heal. Scholars long debated whether bitumen was an actual ingredient in the Egyptian embalming process. For a long time they believed that what looked like bitumen slathered on mummies was actually resin, moistened and blackened with age. More recent studies have shown that bitumen was used at some point but not on the royal mummies many early modern Europeans might have thought they were ingesting. Ironically, Westerners may have believed themselves to be reaping medicinal benefits by eating Egyptian royalty, but any such healing power came from the remains of commoners, not long-dead pharaohs.

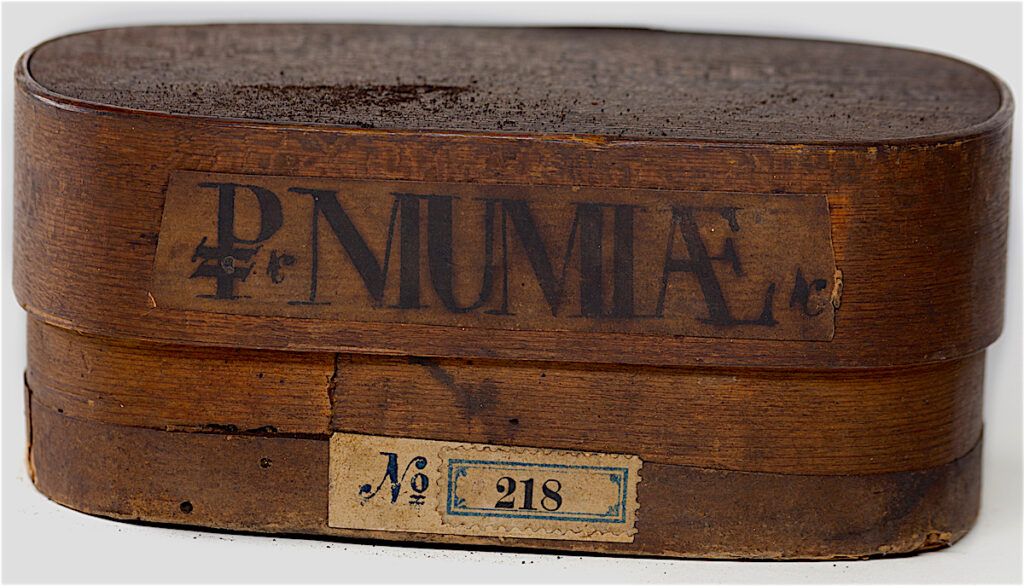

Even today the word mummy conjures images of King Tut and other carefully prepared pharaohs. But Egypt’s first mummies weren’t necessarily royalty, and they were preserved accidentally, by the dry sands in which they were buried more than 5,000 years ago. Egyptians then spent thousands of years trying to replicate nature’s work. By the early Fourth Dynasty, around 2600 BCE, Egyptians had begun experimenting with embalming techniques, and the process continued to evolve over the centuries. The earliest detailed accounts of embalming materials didn’t appear until the 5th century BCE, when Herodotus listed myrrh, cassia, cedar oil, gum, aromatic spices, and natron. By the 1st century BCE, Diodorus Siculus had added cinnamon and Dead Sea bitumen to the list.


Research published in 2012 by British chemical archaeologist Stephen Buckley shows that bitumen didn’t appear as an embalming ingredient until after 1000 BCE, when it was used as a cheaper substitute for more expensive resins. This was the period when mummification went mainstream.

Bitumen was useful for embalming for the same reasons it was valuable for medicine. It protected a cadaver’s flesh from moisture, insects, bacteria, and fungi, and its antimicrobial properties helped prevent decay. Some scholars have suggested that there was also a symbolic use for bitumen in mummification: its black color was associated with the Egyptian god Osiris, a symbol of fertility and rebirth.

Although in the early days intentional mummification was reserved for pharaohs, the process gradually became democratized, first for nobility and other people of means. By the Ptolemaic (332 to 30 BCE) and Roman (30 BCE to 390 CE) periods, mummification had become affordable for even the budget-minded populace. Many of the Romans and Greeks living in Egypt at this time also underwent mummification, though they were motivated more by prestige than by a rewarding afterlife. Mummification became a status symbol: a jewel-encrusted mummiform was the ancient version of driving a new Audi (although much less fun for the user). Because these expats weren’t necessarily concerned with preserving their bodies for the long run, many of the Roman mummies discovered from this period are elaborate on the outside—some have been found covered in gold and diamonds—but collapsing within the wrappings. These mummies also made use of bitumen, resin’s affordable substitute.

These more recent, more cheaply made mummies were the ones that found their way to Europe; they were not the pharaohs some presumed them to be. Ambroise Paré, a 16th-century barber-surgeon who opposed the use of mummy as a drug, claimed, “This wicked kind of drugge doth nothing help the diseased.” Paré noted that Europeans were most likely getting “the basest people of Egypt.” To buyers, though, the mummies would have looked the same; not until the 20th century could scientists tell which ones had been embalmed with bitumen.

What was the attraction of mummy medicine in early modern Europe? Likely the exoticism of mummies, at least in part. Europeans began exploring Egypt in the 13th century, and interest continued for hundreds of years (and still continues). In 1586 English merchant John Sanderson smuggled 600 pounds of mummy parts from an Egyptian tomb:

But as mummy medicine became popular in the West, merchants found new ways to satisfy demand. Tomé Pires, a 16th-century Portuguese apothecary traveling in Egypt, wrote that merchants “sometimes pass off toasted camel flesh for human flesh.” On a visit to Egypt in 1564, Guy de la Fontaine, a doctor to the king of Navarre, asked an Alexandrian merchant about ancient embalming and burial practices. The merchant laughed and said he had made the mummies he was selling. Karl H. Dannenfeldt, author of an influential 1985 article on the subject, “Egyptian Mumia: The Sixteenth Century Experience and Debate,” describes the scene: “The bodies, now mumia, had been those of slaves and other dead persons, young and old, male and female, which he had indiscriminately collected. The merchant cared not what diseases had caused the deaths since when embalmed no one could tell the difference.”

A Local Remedy

As authentic Egyptian mummies begat fraudulent ones, a medicinal shift occurred in Europe that made this trickery less necessary: a growing fondness for medicines made from human flesh, bone, secretions, and even excretions, often taken from the local, recently dead. Scholars now refer to human-derived drugs generally as corpse medicine, which in early modern Europe included blood, powdered skull, fat, menstrual blood, placenta, earwax, brain, urine, and even feces. Richard Sugg, author of Mummies, Cannibals and Vampires: The History of Corpse Medicine from the Renaissance to the Victorians, writes, “For certain practitioners and patients there was almost nothing between the head and feet which could not be used in some way.”

An epileptic (or more likely his doctor) seeking a remedy for seizures in 1643 might turn to Oswald Croll’s Basilica chymica, which offers on page 101 a recipe for an “Antepileptick Confection of Paracelsus.” The recipe’s central ingredient is the unburied skulls of three men who had died violent deaths. Another recipe, for “Treacle of Mumy,” has been quoted often, and for good reason. Croll writes, “Of Mumy only is made the most excellent Remedy against all kinds of Venomes.” He explains:

A footnote explains that Croll’s mumy is “not that Liquid matter which is found in the Egyptian Sepulchers. . . . But according to Paracelsus it is the flesh of a Man, that perishes by a violent death, and kept for some time in the Air.” That is, Croll defines mummy as the flesh of almost any corpse one could find.

What compelled people to believe this form of (medicinal) cannibalism was both useful and acceptable at a time when reports of cannibalism in the New World shocked and horrified Europeans?

Medical historian Mary Fissell reminds us that common understandings of medicinal usefulness were once quite different. Medicine that produced a physiological effect—whether purging or excreting—was considered successful. It certainly makes sense that eating human remains might induce vomiting. Fissell also points out that many of the hormonal treatments developed in the 20th century were made from animals or their by-products. “They had to boil down a hell of a lot of mare’s urine to get that early hormone,” she says, “so we’re not even as far away [in terms of what we deem acceptable or gross today] as we might think.” Premarin, an estrogen-replacement medication derived from mares’ urine, is still widely used today. Bert Hansen, also a medical historian, points out that many medicines were selected through a process of trial and error. “A lot of medical treatment was only one step away from cooking.” He adds that people were “willing to taste and eat things that we now find disgusting” and that for a “middle-class household with no running water and no refrigeration . . . hands, bodies, everything is somewhat smelly and icky all the time. That’s life.”

Not all early doctors or apothecaries advocated the use of corpse medicine. Aloysius Mundella, a 16th-century philosopher and physician, derided it as “abominable and detestable.” Leonhard Fuchs, a 16th-century herbalist, accepted foreign mummy medicine but rejected the local substitution. He asked, “Who, unless he approves of cannibalism, would not loathe this remedy?” Mummy medicine devotees, including English King Charles II, seemed able to work around this uneasiness by differentiating between food and medicine. (Charles reputedly carried around his own homemade tincture of human skull, which was nicknamed the King’s Drops.) In her book Medicinal Cannibalism in Early Modern English Literature and Culture, Louise Noble suggests that people were able to distance the final medicinal product from its original source, the human body, by convincing themselves it had somehow been transformed into something new. For his part, Sugg describes a perceived division between food and medicine when he quotes the religious writer and historian Thomas Fuller, who described mummy medicine as “good Physic [medicine], but bad food.”

Another powerful factor in people’s thinking about corpse medicine was just that—its perceived power. Egyptian mummies were linked to ancient knowledge and wisdom. Many scholars have noted parallels to the Catholic Eucharist, wherein Christ’s own flesh, through the belief in transubstantiation, is ingested for spiritual well-being. With the flesh comes what Noble describes as “the awesome potency of God,” perhaps the strongest corpse medicine of all. Noble suggests that the Catholic belief in the Eucharist led to an acceptance of human corpse medicine. She writes, “One is administered to treat the disease of the body and the other the disease of the soul. . . . Both reflect the belief that the essence of a past life has pharmacological power when absorbed into a life in the present.” As for Protestant mummy-medicine partakers, Noble proposes that eating mummy was a way of getting some power from flesh without having to participate in the Catholic Eucharist.

Noble compares the Christian beliefs in the sacred power of human flesh with the beliefs of Paracelsus, the Renaissance physician, botanist, and alchemist who saw an innate power in the human body. Behind this corpse pharmacology, Noble writes, “is the perception that the human body contains a mysterious healing power that is transmitted in ingested matter such as mummy.” Of this power Paracelsus wrote, “What upon the earth is there of which its nature and power are not found within the human being? . . . For all these great and wondrous things are in the human being: all the powers of the herbs [and] trees are found in the mumia.” Paracelsus’s mumia was not the foreign kind but the healing power of the human body, which he believed could be transferred from person to person.

Though these ideas may seem strange to 21st-century readers, Noble theorizes that the idea of transferring life force is not so different from how some perceive organ donation. Actor Liam Neeson spoke proudly about his deceased wife, Natasha Richardson, who had donated her organs. He told CNN’s Anderson Cooper, “We donated three of her organs, so she’s keeping three people alive at the moment . . . her heart, her kidneys, and her liver. It’s terrific and I think she would be very thrilled and pleased by that.” This somewhat spiritual and partly practical idea that a dead person can give life to another also hints at a sort of immortality for the dead, who get to live on in someone else.

The Father of Utility Gives Dissection a Good Name

Where did all of these early modern, corpse-medicine cadavers come from? Some resulted from accidental deaths, but many others were executed criminals. Great Britain’s Murder Act of 1752 allowed executed murderers to be dissected. The reasoning was twofold: first, it denied murderers a proper burial, thereby inflicting extra punishment beyond death (the act itself called this “further terror and a peculiar mark of infamy”); and second, it provided bodies for anatomical research and medical education. After dissection the bodies often went to apothecaries to be made into medicine.

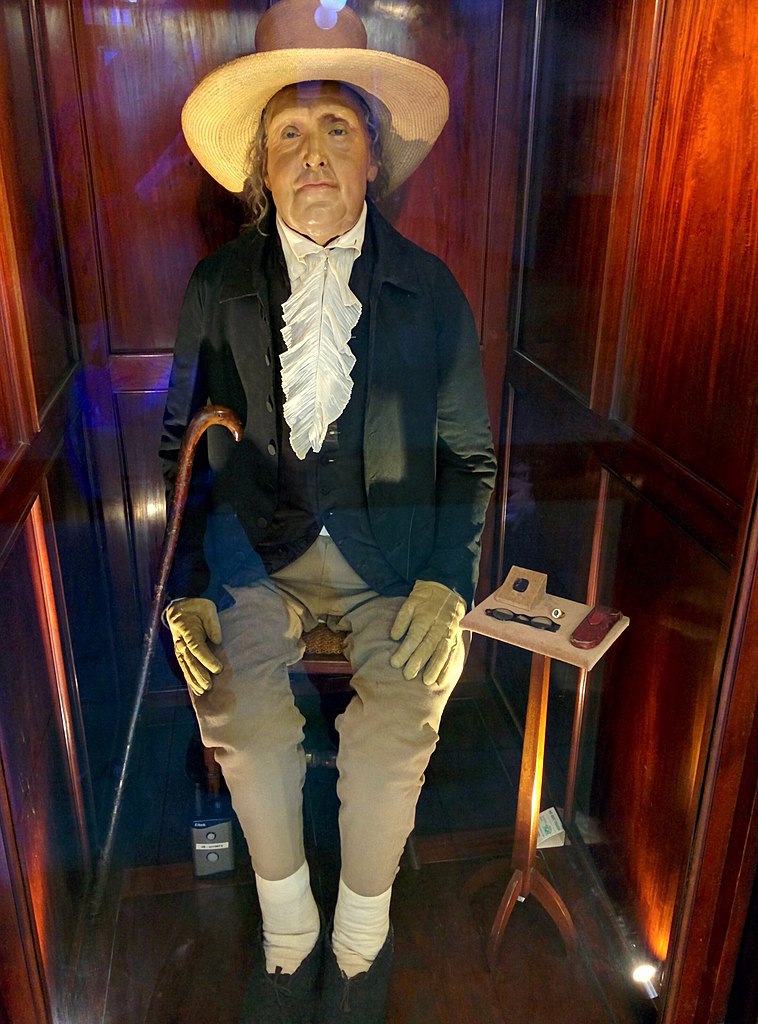

As much as the sick may have appreciated using other people’s bodies as medicine, surely no one would actually want to end up being one of these bodies. Why would anyone volunteer to undergo an act reserved for the worst criminals? Jeremy Bentham, philosopher and father of Utilitarianism, set out to dispel the stigma and fear most people attached to dissection when, in the spirit of usefulness, he became the first person to donate his body to science, in 1832.

In 1824 Bentham’s friend and colleague Thomas Southwood Smith published “Use of the Dead to the Living.” The pamphlet detailed the difficulties medical schools faced in obtaining bodies for their students to dissect. Without a reliable supply of cadavers, Smith wrote, public hospitals would be made into “so many schools where the surgeon by practicing on the poor would learn to operate on the rich.” Bentham had already decided to donate his body, but Smith’s pamphlet also compelled him to draft legislation that inspired the Anatomy Act of 1832, which permitted anyone with legal custody of a dead body to donate it for dissection. Bentham turned his body over to the custody of Smith and instructed him, on Bentham’s death, to dissect it publicly, and then to preserve the head and stuff the body in as lifelike a manner as possible.


Smith failed in his mummification of Bentham’s head: the result was unrecognizable. He wrote, “I endeavored to preserve the head untouched, merely drawing away the fluids by placing it under an air pump over sulphuric acid. By this means the head was rendered as hard as the skulls of the New Zealanders; but all expression was of course gone.” To carry out Bentham’s wishes Smith asked anatomical modeler Jacques Talrich to create a lifelike wax model. The body was stuffed, the wax head attached, and Bentham’s own clothing and hat placed on the body. He now sits in a glass and mahogany case on public view at University College London, where the dissection took place. The mummified head is stored nearby in a climate-controlled room in the college’s archaeology division.

While many today donate their bodies to science out of a sense of utility or goodwill, just as Jeremy Bentham had hoped, not everyone thought so highly of the Anatomy Act. Drafted to encourage body donations, the bill became deeply feared and despised, earning nicknames like the “Dead Body Bill” and the “Blood-Stained Anatomy Act.” The act forced people who could not afford a burial into a fate formerly reserved for murderers: the anatomy classroom. Although he had originally supported the bill, Smith began to worry, even before the act passed, that it would shift the burden of body donation from convicted criminals to the dying poor. In 1829 he said the poor

While no one today is scouting poorhouses for dissection subjects, money is still a factor in most life decisions, including decisions about death.

What Was Old Is New

Death in the Western world has changed. More posthumous options exist than ever before, and the choices beyond coffins and urns are staggering in scope. You or your loved one could become a diamond, a painting, a stained-glass window, a vinyl record, an hourglass, an (artificial) coral reef, or a tattoo. And at least two companies will take your cremated remains and launch them into space; while expensive, this option is still $2,000 cheaper than the average cost of a funeral.

As the median funeral price in the United States has increased (it grew 36% between 2000 and 2012, going from $5,180 to $7,045), more Americans have opted for cremation (43% in 2012 compared with about 26% in 2000), which cost about $1,650 in 2012. The Cremation Association of North America lists cost as the number-one reason people opt for cremation over a typical burial. The organization also cites the range of creative possibilities cremation provides (such as turning ashes and bones into diamonds or tattoos), concerns over environmental impact, increasing geographic mobility (as people move around more, they are less inclined to visit a cemetery), and a decrease in religiousness as contributing factors.

Cost is just one reason; some people are also looking to make something of death, whether it’s becoming a unique piece of jewelry or contributing their bodies to science. Body donation has grown in popularity and gained an altruistic status that Bentham would have appreciated. A 2010 study conducted at Radboud University in Nijmegen, Netherlands, on why people donate their bodies to science concluded that the primary reason was a desire to be useful. Even during the most recent economic recession only 8% of participants were motivated by money. Still, the Pennsylvania Humanity Gifts Registry—the office that oversees all body donations to the state’s medical schools—reported that donations increased in 2009 (about 700 compared with 600 the year before) after the economy nosedived. Funerals cost money; body donation is essentially free.

Most body donors have no way of knowing how their bodies will be used. When Alan Billis signed up to donate his body to science just weeks after being diagnosed with terminal lung cancer in 2008, he was in the rare position of knowing exactly what would happen. The 61-year-old taxi driver from Torquay, England, was the only person to respond to an advertisement seeking a volunteer to be mummified using ancient Egyptian techniques. He became the star of the 2011 Channel 4 documentary Mummifying Alan: Egypt’s Last Secret.

The project was born after a team of British scientists realized that only by actually embalming a human body could they know if their theories about mummification techniques during Egypt’s Eighteenth Dynasty were correct. To prepare for the project, archaeological chemist Stephen Buckley, mentioned earlier in the story, practiced on pigs. “I took it very seriously,” Buckley says. “If I was going to do this experiment on humans, I needed to be as sure as I could be what was going to happen.”

Billis died in January 2011; it took Buckley and his team until August of that year to complete the mummification process. Buckley thinks mummification came closest to perfection during the Eighteenth Dynasty, which lasted from about 1550 to 1292 BCE. This was the period when Tutankhamun briefly reigned. In fact, during the two years that Billis awaited his fate, he cheerfully took to calling himself “Tutan-Alan.” There was a range of embalming techniques and pricing scales available during the Eighteenth Dynasty, but Buckley’s team mummified Billis as the pharaohs of that time would have been embalmed. “The best of the best,” Buckley says.

Buckley and his team first removed Billis’s organs through the abdomen, leaving his heart and brain. The ancient Egyptians thought the heart was what provided intelligence—and was therefore necessary for the afterlife. As for the brain, research has shown that some of Egypt’s best-preserved mummies still held onto theirs, providing a good case to leave Billis’s in place. The team then sterilized the body cavity, substituting an alcohol mixture for palm wine, the original ingredient. Then they lined the cavity with linen bags filled with spices, myrrh, and sawdust and sewed the abdomen closed, sealing it with beeswax. The first four steps were commonly accepted as part of the embalming process, but the fifth step was controversial.

Buckley wanted to prove, however counterintuitive it might seem, that Eighteenth Dynasty embalmers soaked the body in a solution of natron (a naturally occurring mixture of soda ash and baking soda) and a lot of water. Natron was harvested from ancient Egypt’s dry saline lake beds and was used as both a personal and household cleaning agent. It also was employed as a meat preservative, which was essentially its role in mummification. For Billis, Buckley combined sodium carbonate, sodium bicarbonate, sodium chloride, and sodium sulfate. The natron stopped bacterial growth by raising the pH, which prevents enzymes and bacteria from functioning. The water helped retain the body’s form, preserving a lifelike appearance.

Before Buckley’s experiment the accepted theory was that mummification employed dry natron, resins, oils, and herbs. Remember, Egypt’s original mummies were preserved just by the desert’s dry, salty sands. If intentional mummification was desperately trying to keep moisture out, why would embalmers ever want to let water in? In the early 20th century Alfred Lucas, a forensic chemist and Egyptologist, surmised that mummies might have been soaked in a natron solution, but he lacked the technology to prove it. By the beginning of the 21st century Buckley had what he called “a lightbulb moment” when he examined X-rays of mummies from the Eighteenth Dynasty and discovered salt crystals in the soft tissue. He deduced that water must have helped the crystals permeate the body so deeply. Buckley also thinks the water was symbolic. “The natron solution was about being reborn,” he says. During the early Eighteenth Dynasty, Egypt itself was being reborn as the pharaohs attempted to reassert Egyptian identity after the expulsion of foreign rulers. “Egyptologists come in two schools,” says Buckley. “They either see [mummification] as practical only, or they study rituals through texts, and the two groups never get together. But Egyptian mummification was both practical and symbolic.” Buckley kept this in mind while mummifying Billis, allowing the body time to “lie in state” before beginning the mummification process, giving family time to grieve.

Mummifying Billis helped prove Buckley’s theory but also produced an unexpected result. Since the documentary aired, more than a dozen people have inquired about donating their bodies for further mummification study.

Buckley and his team were the first to carry out ancient Egyptian embalming techniques on a 21st-century human, but others have offered a contemporary version of mummification for years. Salt Lake City, Utah, is home to Summum, a religious nonprofit organization and licensed funeral home. Claude Nowell, who later would go by the name Corky Ra, founded the religion in 1975 after he claimed to be visited by what he described as advanced beings. The encounter helped form the tenets of Summum, which draws some inspiration from ancient Egyptian beliefs. Summum promotes mummification and offers its services to believers and nonbelievers for around $67,000.

The group has gotten its fair share of media attention over the years. It is, after all, the only modern mummification facility on the planet, according to Ron Temu, Summum’s mummification expert. The pyramid-shaped headquarters on the edge of Salt Lake City only adds to the intrigue. Summum’s biggest moment of unsought fame came shortly after the death of Michael Jackson in 2009, when a private unmarked helicopter landed near the group’s home. “We did not mummify Michael Jackson,” Temu says, in a tone that suggests he has uttered those words before. Perhaps several times.


Temu was a conventional funeral director for many years before he joined the group. The first human he mummified was his friend and colleague Corky Ra, who died in 2008. Like Jeremy Bentham in London, Corky Ra remains a presence at Summum in mummified form, “joining in” on Summum activities and standing by as Temu works on other people and animals. Corky Ra’s body now rests inside a golden mummiform decorated with a very realistic depiction of his face.

Summum’s process is loosely based on Egyptian techniques, though details remain secret. What Temu would reveal is that, as in the technique used by Buckley’s team, the cadaver is immersed in a solution. But Summum soaks the bodies for up to six months and uses an embalming solution it claims better preserves DNA. Temu says that where the Egyptians were dehydrating the body, Summum aims to seal moisture in. “We want to keep the body as natural as possible, do as little damage as possible,” he says, “so people’s souls can go to the next world.” One of Temu’s proudest achievements is the ability to perfectly preserve people’s eyeballs. “That’s a big tell-tale in mortuary chemistry; after you die your eyes go soft very quickly because your eyeball is close to 100% water.” Summum’s eyeball technique is a closely kept secret.

Many inquire about mummification at Summum despite the steep price. Several have asked if Summum can help them preserve genetic material for future reanimation. But that, Temu says, is not what Summum is about. “Genetic material is preserved, so you could clone the person, but it’s certainly not the goal.”

Many of those interested in Summum’s mummification process are not Summum followers, nor are they—like Alan Billis—donating themselves in a Benthamesque endeavor to be useful. Rather, they seem to find relief in knowing that they, family members, and pets (Summum mummifies many pets) will remain on Earth, in body if not in soul, for the foreseeable future. Are they striving for immortality? Or are they providing some comfort to the still living? Maybe deciding what happens to your body after death is a way of asserting one last bit of control and perhaps putting it to one final bit of use.