Chemistry rarely figures in histories of 20th-century science and technology. Standard accounts manifest a remarkable consensus about what is important, one in which we all appear to know what the key sciences and technologies of the last century have been. Chemistry forms at best a very minor part in these standard stories. Instead histories of science center on particle physics, the atomic bomb, eugenics, molecular biology, and computing. Histories of technology deal mainly with automobiles, aviation, the bomb (again), space rockets, computers, and the Internet. This is something of an exaggeration, but not as much as one might think. Leaving the atomic bomb or the computer out of a course on 20th-century science raises eyebrows; ignoring chemistry barely elicits a response.

Putting chemistry back into those standard stories is not as simple as it sounds. First, it requires a new basis for the writing of histories of science and technology; second, it would force us to change many of the standard historical arguments that shape our account of an extraordinary century.

Fashion is part of the reason that chemistry is left out. Our historical picture is shaped by what was said to be important in the past as well as in the present. Today we want to know the prehistory of biotechnology; yesterday we wanted to know that of the atomic bomb. Chemistry has, by comparison, not been quite so significant in the public imagination this century.

Yet other factors are at play; histories of science often seek to find the origins of present science. The most recent chemistry that figures regularly in the courses and the textbooks of the historians is that of the late 19th century. Along with electricity, it was chemistry—specifically synthetic organic chemistry—that was central to the so-called second industrial revolution. The rise of research in the chemical industry—rather than the academy—is the center of attention for historians. And so it should be.

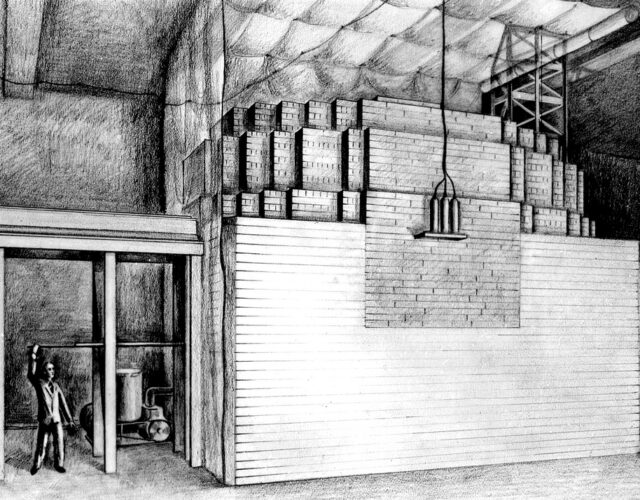

But the fact that this new wave of research is the last routine reference helps us understand that the standard histories of science and technology, whether old ones or the ones implicit in up-to-date courses, are not what they appear to be. They are studies of the early history of some subsequently significant sciences and technologies, of novelties when new. We are left with a problem, for we have neither a history of invention and innovation nor a history of sciences and technologies in use. A true history of invention would be a history primarily of failures, for most inventions, even patented ones, are not exploited. The technologies that are in use at any particular time will have had their origins in such a mixture of ages that one cannot sensibly distinguish old and new. The early-20th-century world, even in developed countries, used horses as well as motorcars, kerosene as well as electricity, wood as well as steel.

Putting chemistry on the agenda requires a proper history of invention and of technologies in use. On the invention side, chemistry has been an area of extraordinary productivity up to and throughout the 20th century. The relative lack of attention given to the history of industrial research means that the full scope of this inventive activity has not been recognized. Equally important is that we don’t have a fully rounded history of academic science, so the enormous weight of chemical research in the history of the university is not evident. To argue that the Manhattan Project inaugurated an era of “big science” (as many of those trained in the physics-oriented history of science of the recent past have done) is to ignore the huge, long-term R&D projects of the chemical industry that long predated it. Perhaps the best examples, and ones that will be revisited later in this article, are processes for hydrogenating various substances: for example, converting coal into gasoline. These projects took years of research on a very large scale, which continues to the present day.

At the level of studies of use and historical significance—which are related but not the same—chemistry has many claims to be extremely well represented. Take, for example, World War II, which is usually discussed in terms of atomic bombs and V-2 rockets; these contributions are summed up in the phrase “the physicists’ war.” As many historians from Thomas P. Hughes to, most recently, Pap A. Ndiaye have pointed out, chemistry, chemists, and chemical corporations were central to the bomb project. Yet there is much more to be said. It is not at all obvious that the atomic bomb made a positive contribution to the war; in fact the bomb became a very expensive way to destroy two Japanese cities that could easily have been destroyed by a couple more large conventional air raids. The V-2 was without question a major setback to the German war economy: its production killed more people in the manufacturing plant than its deployment as a weapon killed in the field.

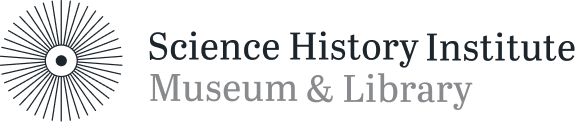

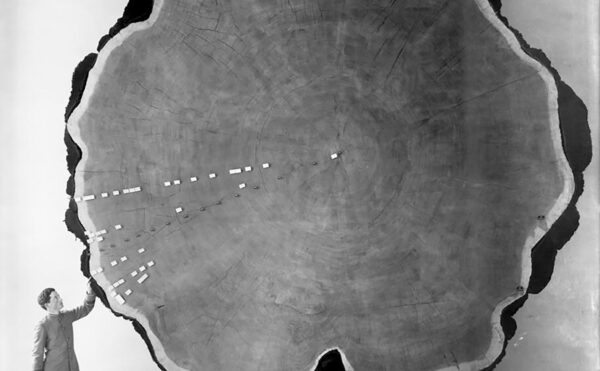

A largely forgotten achievement, by contrast, was the great coal hydrogenation works that kept Germany fighting in that war. Without various gasoline-from-coal technologies Germany could not have fought World War II as it did. For the Nazis, self-sufficiency in fuel was a key objective, with the establishment of synthetic oil production a central element of the four-year plan of 1936 and with the appointment of Hermann Göring as “fuel commissar.” I. G. Farben’s hydrogenation process was selected as the primary model, and I. G. Farben built and ran many plants, including one for the new coal-based chemical complex at Auschwitz that would be severely bombed toward the end of the war. The process involved high-pressure hydrogenation with a catalyst under conditions that could be adjusted to produce a particular product, such as aviation gasoline. The process combined, as it were, the making of a crude and the refining of it. It was pioneered by Friedrich Bergius, who received the Nobel Prize in Chemistry in 1931 for his work. As ever, there were alternatives, including the Fischer-Tropsch process, which involved the hydrogenation of carbon monoxide rather than coal. By 1944 synthetic fuel production was up to 3 million tons, or 25.5 million barrels, per year. But the basic processes predated World War I.

Although the technology of coal hydrogenation was taken to many countries, it never became a global enterprise. By the early 1920s the key patents were controlled by I. G. Farben in Germany, but a decade later the international rights were controlled jointly by I. G. Farben, the American oil company Standard Oil, the Anglo-Dutch oil company Royal Dutch Shell, and the British chemical combine Imperial Chemical Industries (ICI). ICI first built a plant in Billingham, England, where it produced gasoline from 1935 to 1958, and added a second one at Heysham in Lancashire during the war. On its defeat Germany was banned from hydrogenating coal; in 1949 its unused plants were ordered to be dismantled. The Soviet Union took four of these plants to Siberia. In Soviet-controlled East Germany, isolated from Western oil markets, coal continued to be hydrogenated until the 1960s. Spain meanwhile developed a synthetic fuel program at Puertollano following a 1944 deal between the pro-Axis Spanish government and Germany. In 1950 Spain’s government signed new deals for technology with BASF and others; production started in 1956 and lasted until 1966.

Another case was coal-rich South Africa, where in 1955 the Sasol company started producing gasoline using the Fischer-Tropsch process. Following the Arab oil embargo of 1973, Sasol II was built; the cutoff of supplies from Iran after the Iranian revolution of 1979 led to the building of Sasol III. Like the German plants, the Sasol complex was bombed, in this case not by the United Nations but by Umkhonto we Sizwe (Spear of the Nation), the armed wing of the African National Congress, in June 1980. The attack marked an important point in the development of a guerrilla war against the Apartheid regime. Racist South Africa, run by its National Party, produced 150,000 barrels of gasoline per day, twice the level of synthetic fuel production achieved in Nazi Germany. Yet coal hydrogenation never produced gasoline that could compete in world markets; its price was always above that of gasoline made from crude oil, and its expense precluded its use except as a tool of autarkic governments. Nevertheless it deserves a place in histories of the 20th century because without it Nazi Germany and South Africa could hardly have held on to their power as long as they did.

The hydrogenation of coal was just one of a number of hydrogenation processes that came into use in the late 19th and early 20th centuries. A second hydrogenation technology was used to make margarine mainly from nondairy fats, a vital resource at a time when dairy fat was precious. One remarkable and little-known consequence of this technology was the creation of a vast new 20th-century whaling industry. By 1914 whale oil was already being hydrogenated for margarine by the emerging great margarine firms, but by the 1930s this was its main use. The 1920s and 1930s saw a huge expansion in whaling in the South Atlantic, using large factory whaling ships. Whale oil was ultimately used to make some 30% to 50% of all European margarine at this time. During 1931 South Atlantic whale oil production equaled French, Italian, and Spanish olive oil production combined. Mainly consumed in Germany, Britain, and Holland, the whale-oil supply was dominated by the Anglo-Dutch firm Unilever. (Today most margarine is made by hydrogenating vegetable oils.)

Unilever was forced by the new Nazi government to finance the building of a German-flagged whaling fleet, making Germany a whaling nation for the first time. The first floating factory built in Germany, the Walter Rau, named for the owner of the main German margarine firm, went to the southern oceans in the mid-1930s. In its first season it processed 1,700 whales. From these it produced 18,264 tons of whale oil, 240 tons of sperm oil, 1,024 tons of meat meal, 104 tons of canned meat, 114 tons of frozen meat, 10 tons of meat extract, 5 tons of liver meal, 22 tons of blubber fiber, and 11 tons of glands for medical experiments. By 1939 the Germans were deploying 5 owned and 2 chartered factory ships. The Japanese also went into large-scale whaling at this time. After World War II Germany was prevented from whaling for some years, and its factory ships were used by other powers. Whaling boomed, and up to 20 floating factories were operating in the Antarctic, more than ever before. But the catch never reached the peaks of the 1930s, and the industry collapsed in the early 1960s. What we have considered a 19th-century industry is better seen as a mid-20th-century industry, one so rapacious that it destroyed itself in a way its 19th-century predecessor lacked the technology to achieve.

A third hydrogenation process was to have longer-lasting and much greater effects and is somewhat better known outside the ranks of historians of chemistry: the hydrogenation of nitrogen to produce ammonia, from which nitrates are derived. As an invention and innovation story it is placed before World War I, but in terms of how it changed the world it must be placed after the second world war rather than the first. For it was only then that nitrate in vast quantities became central to agriculture, such that by the end of the century one-third of the nitrogen in human food came from human-made nitrate.

The phrase green revolution is applied to the introduction of new agricultural plant varieties, fertilizers, and techniques to the developing world in the 1960s. Partly because agriculture is associated with poverty and the past and partly because of historians’ focus on novelty, an even more significant agricultural revolution in the developed world has been missed. In the developed world the years after 1945 saw a profound revolution in agriculture. Changes in the labor productivity rate, mainly owing to mechanization but also to nitrates, were greater than those in industry or services and were at a much higher level than ever before. This change resulted in enormously increased outputs combined with rapidly falling labor inputs. Land productivity also increased tremendously, such that the already large gap in productivity between the developed and the developing world widened further after World War II. In Britain, where productivity was already quite high, yields doubled in the postwar years. The later green revolution of the 1960s narrowed the gap in some parts of the developing world that this earlier green revolution had created. But, for example, Japan still leads the rest of Asia in the productivity of its rice paddies. Furthermore the United States remained a major wheat exporter, but increasingly to the developing world. It exported wheat to the Soviet Union in the 1970s and 1980s on a large scale. It remains a major producer of raw cotton, whose major export market was once Britain but is now the poor countries of the world, where the cotton spinning industry is concentrated. Of course nitrates are not the only cause; many other products of the chemical and other industries are involved. But these unheralded changes have had a profound effect.

There is of course much more to be said about the combined influence of chemistry and the chemical industry. Especially after 1945 hydrogenation technologies have been just a small part of the total of advances in chemistry. Yet in a sense we don’t have to say more—the case is made, perhaps all the more strongly because the technologies are not especially well-known.

If many of the key effects of the modern chemical industry came decades after chemistry ceased to be central to most histories of science and technology, aspects of chemistry show that many of the things we take to be recent are much older than we tend to think. It is commonly contended, for example, that close academia–industry relations are novel; indeed, many hold that the universities of the rich world have gone through a revolutionary change in the recent past. Consideration of the history of academic chemistry shows how misleading such a picture is. The joint inventors of the main process for making synthetic ammonia, Fritz Haber and Carl Bosch, were an academic and an industrialist, respectively. (Each of the men later received a Nobel Prize for his achievements.) Another important pre–World War I academic physical chemist, Walther Nernst, invented a new kind of lightbulb, the Nernst lamp, which he sold to the major German electrical company AEG. Russian-born Vladimir Ipatieff, a high-pressure chemist, worked in the 1930s for both the oil industry and Northwestern University; in 1938 he and an associate patented the alkylation process, used to produce isooctane for aviation gasoline on a grand scale during the war. Other examples of this relationship abound in the history of technology.

It seems that the very ubiquity of chemical invention, chemical research, and the outputs of the chemical industry has made these contributions seem rather mundane, even to historians of science and technology. Paradoxically the very success of chemical invention and research has been the undoing of its reputation in history.